The relentless pursuit of technological solutions in medicine has positioned artificial intelligence as a potential game-changer in the fight against breast cancer, with much of the excitement centered on developing ever more powerful and complex algorithms. However, a growing body of evidence suggests that the true frontier for improving diagnostic accuracy may lie not in the sophistication of the algorithm itself, but in the meticulous preparation of the data it consumes. This foundational step, known as pre-processing, is emerging from the background to take center stage, compelling researchers and clinicians to reconsider where the most significant gains in AI-driven healthcare can be made. The quality of the input dictates the quality of the output, and in a field where precision can mean the difference between life and death, perfecting this initial phase could be the most critical piece of the puzzle.

The Unseen Foundation of AI Performance

Beyond the Algorithm: The Critical Role of Pre-Processing

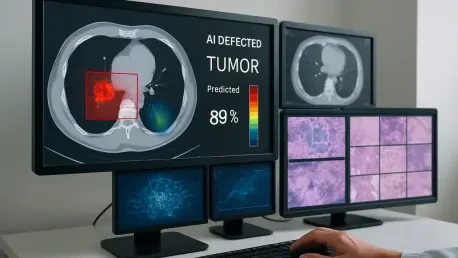

Deep learning models, for all their complexity, are fundamentally dependent on the clarity and consistency of the data they are trained on. Pre-processing encompasses a suite of techniques designed to refine raw medical images before they are introduced to an AI system. These methods include noise reduction, which filters out random disturbances or artifacts from the imaging process; contrast enhancement, which makes the boundaries between different tissue types more distinct; and image normalization, which standardizes brightness and intensity levels across a diverse set of scans. By performing this digital cleanup, pre-processing ensures that the AI algorithm learns to identify genuine pathological features rather than being misled by irrelevant technical variations that can arise from different machines, operators, or patient-specific factors. This careful curation of data is not merely a preliminary task but a strategic intervention that directly elevates the performance and reliability of the diagnostic model.
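The three techniques named above can be chained into a simple pipeline. The sketch below is a minimal illustration, assuming 2-D grayscale images stored as NumPy arrays. The specific methods (a 3x3 median filter for noise reduction, global histogram equalization for contrast enhancement, z-score normalization for intensity standardization) are common textbook choices picked for illustration, not the methods of any particular study; production pipelines often use more specialized variants such as contrast-limited adaptive histogram equalization (CLAHE).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_denoise(image: np.ndarray, size: int = 3) -> np.ndarray:
    """Noise reduction: replace each pixel with the median of its size x size neighbourhood."""
    pad = size // 2
    padded = np.pad(image, pad, mode="reflect")
    windows = sliding_window_view(padded, (size, size))  # shape (H, W, size, size)
    return np.median(windows, axis=(-2, -1))


def equalize_histogram(image: np.ndarray, bins: int = 256) -> np.ndarray:
    """Contrast enhancement: global histogram equalization, output in [0, 1]."""
    hist, edges = np.histogram(image.ravel(), bins=bins)
    cdf = np.cumsum(hist).astype(np.float64)
    cdf = (cdf - cdf.min()) / max(cdf.max() - cdf.min(), 1e-12)
    # Map every pixel through the normalized cumulative distribution.
    return np.interp(image.ravel(), edges[:-1], cdf).reshape(image.shape)


def zscore_normalize(image: np.ndarray) -> np.ndarray:
    """Normalization: zero mean and unit variance, so scans from different machines share a scale."""
    std = image.std()
    return (image - image.mean()) / (std if std > 0 else 1.0)


def preprocess(image: np.ndarray) -> np.ndarray:
    """Denoise, then enhance contrast, then normalize intensities."""
    image = image.astype(np.float64)
    return zscore_normalize(equalize_histogram(median_denoise(image)))
```

Denoising before equalization is one reasonable ordering, since equalizing first would also stretch the noise; other orderings may suit other data.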

The impact of these preparatory steps on the accuracy of breast tissue segmentation is both direct and substantial. When an AI model is fed raw, unprocessed images, it may struggle to differentiate between subtle cancerous lesions and benign artifacts, leading to a higher rate of false positives or, more dangerously, false negatives. Effective pre-processing mitigates these risks by creating a standardized, high-fidelity dataset that faithfully represents the underlying biological structures. This allows the AI to develop a more robust and generalizable understanding of what constitutes abnormal tissue. Consequently, the resulting segmentation (the process of outlining specific regions of interest, such as tumors) becomes more precise. This improvement in segmentation accuracy is the cornerstone of a more effective diagnostic pipeline, enabling the AI to provide clinicians with clearer, more reliable insights to guide their decision-making process and ultimately improve patient outcomes.
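Segmentation accuracy is usually quantified by comparing a predicted mask against an expert-drawn reference. The sketch below assumes binary NumPy masks and uses the Dice similarity coefficient, one widely used overlap metric (the text does not prescribe a particular one), alongside pixel-level counts of false positives and false negatives.

```python
import numpy as np


def segmentation_scores(pred: np.ndarray, truth: np.ndarray) -> dict:
    """Compare a binary predicted mask with a reference mask, pixel by pixel."""
    pred = pred.astype(bool)
    truth = truth.astype(bool)
    tp = int(np.sum(pred & truth))    # lesion pixels correctly outlined
    fp = int(np.sum(pred & ~truth))   # healthy tissue wrongly flagged (false positives)
    fn = int(np.sum(~pred & truth))   # lesion pixels missed (false negatives)
    denom = 2 * tp + fp + fn
    dice = 1.0 if denom == 0 else 2 * tp / denom  # both masks empty counts as perfect agreement
    sensitivity = 1.0 if tp + fn == 0 else tp / (tp + fn)
    return {"dice": dice, "sensitivity": sensitivity, "fp": fp, "fn": fn}
```

For a 2x2 reference lesion and a prediction that covers it plus two extra background pixels, the function reports `fp == 2`, `fn == 0`, and a Dice score of 0.8.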

The Necessity of a Context-Dependent Strategy

A crucial finding in the study of AI diagnostics is the clear rejection of a “one-size-fits-all” mentality when it comes to pre-processing. The optimal combination of image enhancement techniques is not universal; instead, it depends heavily on the specific context of the clinical data. The imaging modality in use (mammography, magnetic resonance imaging (MRI), or ultrasound) plays a significant role, as each technology has its own characteristics and typical artifacts. For example, techniques that reduce speckle noise, the granular interference pattern characteristic of ultrasound, may be of little use for mammograms, where contrast enhancement is often the greater priority. This variability necessitates a tailored approach, where the pre-processing pipeline is carefully designed and fine-tuned to match the specific dataset being analyzed, turning what was once seen as a routine step into a nuanced and strategic discipline.
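A context-dependent strategy can be expressed as a lookup from modality to an ordered list of steps. In the sketch below, the step choices are illustrative assumptions rather than recommendations: a log transform followed by smoothing for ultrasound (a simplified homomorphic approach, since speckle is approximately multiplicative), a percentile contrast stretch for mammography, and z-score intensity normalization for MRI. The names `PIPELINES` and `preprocess_for` are hypothetical.

```python
from typing import Callable, Dict, List

import numpy as np

Step = Callable[[np.ndarray], np.ndarray]


def log_transform(img: np.ndarray) -> np.ndarray:
    # Speckle is roughly multiplicative; in the log domain it becomes additive,
    # so the smoothing step that follows can treat it like ordinary noise.
    return np.log1p(np.clip(img, 0.0, None))


def box_smooth(img: np.ndarray) -> np.ndarray:
    # 3x3 mean filter: a simple stand-in for a dedicated despeckling filter.
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return sum(padded[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0


def stretch_contrast(img: np.ndarray) -> np.ndarray:
    # Map the 1st..99th intensity percentiles onto [0, 1], clipping outliers.
    lo, hi = np.percentile(img, [1, 99])
    return np.clip((img - lo) / max(hi - lo, 1e-12), 0.0, 1.0)


def zscore(img: np.ndarray) -> np.ndarray:
    # Zero mean, unit variance intensity normalization.
    std = img.std()
    return (img - img.mean()) / (std if std > 0 else 1.0)


# Hypothetical per-modality pipelines; real choices must be tuned per dataset.
PIPELINES: Dict[str, List[Step]] = {
    "ultrasound": [log_transform, box_smooth, zscore],
    "mammography": [stretch_contrast, zscore],
    "mri": [zscore],
}


def preprocess_for(modality: str, img: np.ndarray) -> np.ndarray:
    """Apply the pipeline registered for this modality, step by step."""
    try:
        steps = PIPELINES[modality.lower()]
    except KeyError:
        raise ValueError(f"no pre-processing pipeline defined for {modality!r}") from None
    out = img.astype(np.float64)
    for step in steps:
        out = step(out)
    return out
```

Keeping the pipelines as data rather than hard-coded branches makes it straightforward to swap or reorder steps when a new dataset calls for it.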

This context-dependent nature of pre-processing has profound implications for the development and deployment of AI solutions in healthcare. It underscores the need for adaptable, intelligent systems that can be calibrated for different clinical environments and patient populations. Developing such systems requires a deep understanding of both the computer science behind the algorithms and the medical physics of the imaging technologies. It shifts the focus from creating a single, monolithic AI model to building a flexible framework that can be optimized for specific diagnostic tasks. This adaptability is essential for creating robust and reliable tools that can deliver consistent performance in the unpredictable and diverse conditions of real-world medical practice. By embracing this tailored methodology, the field moves closer to realizing AI systems that are not just powerful in theory but are also practical and effective in the clinic.
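Calibrating pre-processing for a given clinical environment can be framed as a selection problem: hold the trained segmenter fixed, run it on one site's validation images under each candidate pipeline, and keep the pipeline with the best mean score. The sketch below assumes binary masks, a hypothetical `segment` callable standing in for the model, and mean Dice as the selection criterion; `select_pipeline` is an illustrative name.

```python
from typing import Callable, Dict, List, Tuple

import numpy as np

Pipeline = Callable[[np.ndarray], np.ndarray]


def dice(pred: np.ndarray, truth: np.ndarray) -> float:
    """Overlap between two boolean masks; 1.0 when both are empty."""
    inter = np.sum(pred & truth)
    total = pred.sum() + truth.sum()
    return 1.0 if total == 0 else float(2.0 * inter / total)


def select_pipeline(
    candidates: Dict[str, Pipeline],
    segment: Callable[[np.ndarray], np.ndarray],
    images: List[np.ndarray],
    masks: List[np.ndarray],
) -> Tuple[str, Dict[str, float]]:
    """Score each candidate pipeline with a fixed segmenter on one site's
    validation set; return the best pipeline's name and all scores."""
    scores = {
        name: float(np.mean([dice(segment(pipe(img)), m) for img, m in zip(images, masks)]))
        for name, pipe in candidates.items()
    }
    best = max(scores, key=scores.get)
    return best, scores
```

Because the selection itself consumes validation data, the chosen pipeline should then be confirmed on a separate held-out test set to avoid an optimistic estimate.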

Translating a Concept into Clinical Reality

Empowering Clinicians and Improving Patient Outcomes

The ultimate goal of refining AI through superior pre-processing is to create a tangible, positive impact on clinical workflows and patient care. By significantly enhancing the quality and consistency of input images, these techniques enable deep learning models to function as a highly reliable “second opinion” for radiologists. This AI-powered assistance can help clinicians detect subtle abnormalities that might be missed by the human eye, particularly in high-volume settings where fatigue can be a factor. The result is a more efficient and accurate diagnostic process, allowing for quicker and more confident decision-making. This augmentation of human expertise can lead to a reduction in diagnostic errors and, most importantly, facilitate the earlier detection of breast cancer, which is a critical factor in improving survival rates and treatment outcomes for patients around the world.

However, realizing these clinical benefits is not a task that can be accomplished by technologists working in isolation. The development of effective AI diagnostic tools hinges on deep, synergistic collaboration between multiple disciplines. Computer scientists who build the algorithms must work hand-in-hand with medical professionals who provide the essential clinical context and an understanding of the disease. Furthermore, imaging experts who are intimately familiar with the physics and limitations of technologies like MRI and mammography are indispensable for designing effective pre-processing strategies. This interdisciplinary partnership ensures that the technological solutions being created are not only technically sound but also clinically relevant and seamlessly integrated into existing medical workflows. This synthesis of knowledge is the primary engine for driving meaningful and sustainable innovation in the ongoing fight against breast cancer.

Building a Foundation of Trust Through Rigorous Validation

For any AI-driven technology to gain acceptance and be integrated into standard medical practice, it must be built upon an unwavering foundation of trust and transparency. This trust can only be earned through rigorous, comprehensive validation processes that prove the technology’s reliability and efficacy. It is insufficient to test AI models solely against idealized, clean datasets that do not reflect the complexities of clinical reality. Instead, validation must be conducted using diverse, real-world data that encompasses a wide range of patient demographics, equipment variations, and image qualities. This commitment to testing in realistic scenarios is paramount for producing outcomes that are reproducible and practically applicable. Such a robust approach is essential for gaining the confidence of the medical community, satisfying regulatory bodies, and ensuring that these tools are safe and effective for patient use.
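One concrete way to test against equipment variation rather than idealized data is leave-one-site-out evaluation: each fold holds out every scan from one hospital or scanner, so the model is always scored on a site it never saw during training. The minimal pure-Python sketch below only builds the index splits and assumes site labels are available as metadata; scikit-learn's `LeaveOneGroupOut` provides equivalent splitting.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterator, List, Sequence, Tuple


def leave_one_site_out(
    sites: Sequence[Hashable],
) -> Iterator[Tuple[Hashable, List[int], List[int]]]:
    """Yield (held_out_site, train_indices, test_indices) so that every test
    fold comes entirely from a site never seen in training."""
    by_site: Dict[Hashable, List[int]] = defaultdict(list)
    for i, site in enumerate(sites):
        by_site[site].append(i)
    for held_out in by_site:  # sites in order of first appearance
        test = by_site[held_out]
        train = [i for s, idx in by_site.items() if s != held_out for i in idx]
        yield held_out, sorted(train), test
```

Reporting the per-fold scores, not only their average, shows whether performance collapses on any particular site.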

Parallel to the technical validation, the increasing sophistication of AI in healthcare demands a thorough and proactive engagement with its ethical implications. As algorithms play a more significant role in life-or-death decisions, critical questions surrounding accuracy, accountability, and algorithmic bias come to the forefront. A significant concern is the potential for AI systems, if not carefully designed and monitored, to perpetuate or even amplify existing healthcare disparities. For instance, a model trained predominantly on data from one demographic group may perform less accurately for others. Addressing this requires a commitment to fairness and equity in the data collection and model training processes, ensuring that the benefits of this advanced technology are accessible and reliable for all segments of the population. This ethical diligence is not an afterthought but a core component of responsible innovation.
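Disparities of this kind only become visible when performance is broken down by subgroup instead of reported as a single average. The sketch below assumes binary case-level labels and predictions plus a hypothetical group label per patient, and computes sensitivity (the fraction of cancers detected) for each group and the largest gap between groups. Sensitivity is just one choice; specificity and other metrics deserve the same breakdown.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence, Tuple


def sensitivity_by_group(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable]
) -> Tuple[Dict[Hashable, float], float]:
    """Per-group sensitivity and the largest gap between any two groups.
    Groups with no cancer-positive cases are left out."""
    tp: Dict[Hashable, int] = defaultdict(int)
    pos: Dict[Hashable, int] = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:  # only cancer-positive cases count toward sensitivity
            pos[g] += 1
            tp[g] += int(p == 1)
    sens = {g: tp[g] / pos[g] for g in pos}
    gap = max(sens.values()) - min(sens.values()) if sens else 0.0
    return sens, gap
```

With small subgroups these estimates are noisy, so a gap would normally be reported with confidence intervals before conclusions are drawn.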

Navigating the Path to Widespread Adoption

Overcoming Practical and Ethical Hurdles

To navigate the complex ethical landscape of AI in medicine, a forward-thinking approach involves the formation of multidisciplinary task forces. These groups, comprising ethicists, technologists, legal experts, and clinicians, would be tasked with establishing clear and robust ethical guidelines. These guidelines must govern critical areas such as algorithmic transparency, data privacy and patients' rights over their data, and accountability when errors occur. By creating a strong ethical framework before widespread deployment, the healthcare industry can ensure that the integration of AI is managed responsibly. This proactive governance helps to build and maintain public trust, which is essential for the long-term success and acceptance of AI as a standard component of patient care. A steadfast commitment to ethical integrity ensures that technological advancement remains firmly aligned with the core mission of medicine: to heal and protect.

Beyond the ethical considerations, the practical challenges of implementation present significant hurdles to the widespread adoption of advanced AI systems. The required investment in technology, including high-performance computing infrastructure and sophisticated software, can be substantial. Healthcare institutions must also invest in comprehensive training programs to ensure that medical staff, from radiologists to IT specialists, are equipped with the skills needed to use and manage these new tools effectively. This necessitates a careful cost-benefit analysis by providers to justify the upfront expenditure. The discussion must also extend to the vital issue of equitable access. The ultimate vision should be a future where every patient, regardless of geographic location or socioeconomic status, can benefit from these technological advances, preventing a new digital divide in healthcare.

A Vision for Equitable and Integrated Healthcare

To catalyze broader adoption and bridge the gap between development and clinical use, strategic pilot programs and collaborations between technology companies and healthcare institutions are essential. These initiatives serve as crucial testing grounds, allowing for the real-world evaluation of AI systems in a controlled clinical environment. Such programs can demystify the technology for healthcare professionals, provide tangible evidence of its benefits in improving efficiency and accuracy, and help establish best practices for seamlessly integrating these innovative tools into existing diagnostic workflows. By demonstrating clear value and creating a roadmap for implementation, these partnerships can foster a more receptive and prepared environment for the future of AI-enhanced medical imaging, accelerating the transition from promising research to a new standard of care.

Ultimately, the comprehensive research into the role of pre-processing marked a pivotal shift in the understanding of AI in medical diagnostics. The findings demonstrated that the thoughtful application of image enhancement techniques was not merely a preparatory step but a foundational element that directly unlocked the potential of deep learning models for more reliable and efficient cancer detection. This work wove together technological advances and the critical need for interdisciplinary collaboration, rigorous validation, and ethical foresight. It painted a pragmatic yet optimistic picture of a future where the synergy between advanced technology and human medical expertise, guided by a framework of ethical responsibility and a commitment to equitable access, could revolutionize patient care and save lives.