What happens when an AI tool built to diagnose critical conditions begins to falter as the world around it changes, and how can regulators keep it reliable? That question now confronts regulators as artificial intelligence reshapes healthcare. With AI medical devices becoming integral to patient care, ensuring their sustained effectiveness demands new approaches. The U.S. Food and Drug Administration (FDA) has launched a public consultation on the issue, inviting stakeholders to help shape oversight of these technologies.

This initiative matters because AI systems are spreading through healthcare, from detecting early signs of disease to tailoring treatment plans, and their ability to adapt to changing conditions is both a strength and a vulnerability. The FDA recognizes that, unlike traditional medical devices, AI tools can degrade in performance over time as the data they see in practice shifts away from the data they were trained on, a phenomenon known as “data drift.” The consultation aims to establish frameworks that protect patient safety while still supporting technological advancement.

Why AI Medical Devices Pose a Unique Regulatory Challenge

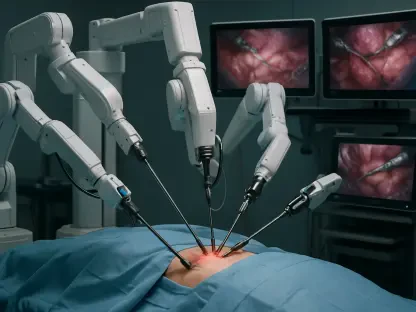

AI medical devices stand apart from their conventional counterparts due to their capacity to learn and evolve. While a traditional stethoscope or scalpel maintains consistent performance, an AI algorithm can shift in accuracy as it encounters new patient demographics or updated clinical protocols. This inherent adaptability, while innovative, creates a regulatory puzzle for the FDA, which must ensure that these tools remain reliable long after initial approval.

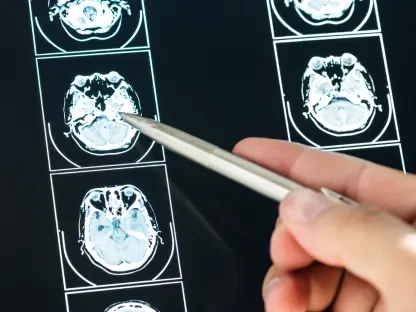

The core issue lies in the unpredictable nature of real-world environments. Pre-market testing, though rigorous, often fails to account for variables like diverse user interactions or evolving healthcare practices. A diagnostic AI, for instance, might excel in controlled trials but struggle when deployed in hospitals with different patient populations, highlighting the need for ongoing evaluation mechanisms that can detect and address performance declines.

This complexity drives the FDA’s urgency to rethink traditional oversight models. With submissions for AI-enabled devices surging, a sign of their growing role in clinical settings, the agency seeks to develop adaptive strategies that match the pace of innovation. Through public input, the goal is to create a system that anticipates changes rather than reacting to failures, safeguarding patient outcomes as care becomes more digital.

The High Stakes of AI in Healthcare Oversight

The integration of AI into healthcare promises revolutionary outcomes, such as faster cancer detection or personalized therapies based on individual data. Yet, this promise comes with significant risks, as these systems can introduce errors if their performance erodes unnoticed. A 2025 study by a leading health tech consortium revealed that nearly 30% of AI tools showed reduced accuracy within two years of deployment due to data drift, underscoring the critical need for vigilant oversight.

Beyond technical challenges, the stakes extend to patient trust. When an AI system misdiagnoses a condition because it fails to adapt to new medical guidelines, the consequences can be dire, eroding confidence in both the technology and the healthcare system. The FDA’s focus on sustained effectiveness is not merely a bureaucratic exercise but a fundamental step to ensure that AI remains a reliable partner in medical decision-making.

Moreover, the rapid adoption of these devices amplifies the urgency. As hospitals and clinics increasingly rely on AI for critical tasks, the potential impact of any performance issue grows with it. Regulatory oversight must evolve to match this scale, balancing the drive for innovation with the imperative to protect public health across diverse clinical settings.

Key Hurdles in Maintaining AI Device Performance

Diving deeper into the FDA’s concerns, several critical challenges emerge in ensuring AI medical devices perform consistently. Data drift stands out as a primary issue, where shifts in input variables—such as changes in patient age distributions or regional healthcare practices—can skew outputs. For example, an AI tool trained on urban hospital data might falter in rural settings, producing inaccurate recommendations due to unaccounted differences.
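One way to make the idea of a shifted age distribution concrete is the Population Stability Index (PSI), a general-purpose drift statistic. The sketch below is illustrative only: the ages, the bin edges, and the function names are assumptions made for this example, and nothing here is a method prescribed by the FDA.

```python
import math

def bin_proportions(values, bin_edges, eps=1e-6):
    """Share of values in each bin; eps keeps empty bins out of log(0)."""
    if not values:
        raise ValueError("values must not be empty")
    counts = [0] * (len(bin_edges) + 1)
    for v in values:
        # Bin index = number of edges at or below v.
        counts[sum(1 for edge in bin_edges if v >= edge)] += 1
    return [max(c / len(values), eps) for c in counts]

def population_stability_index(baseline, current, bin_edges):
    """PSI between a baseline sample and a current sample on shared bins."""
    p = bin_proportions(baseline, bin_edges)
    q = bin_proportions(current, bin_edges)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))

# Hypothetical patient ages, for illustration only.
training_ages = [25, 35, 45, 55, 65, 75] * 50   # population seen in training
deployed_ages = [55, 65, 75, 85] * 75           # older population at a new site
AGE_EDGES = [30, 50, 70]                        # assumed age bands

psi = population_stability_index(training_ages, deployed_ages, AGE_EDGES)
print(f"PSI = {psi:.2f}")
```

A PSI of zero means the two samples fall into the bins in identical proportions. A common rule of thumb treats values above roughly 0.25 as a substantial shift, but that cutoff is a modelling convention, not a regulatory threshold.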

Another hurdle is the gap between pre-market testing and real-world application. Clinical trials often occur in controlled settings that don’t mirror the chaos of everyday medical practice, where user error or workflow variations can alter AI behavior. This discrepancy means that even well-vetted devices might underperform post-deployment, necessitating new methods to evaluate their effectiveness in live environments.

Lastly, existing regulatory frameworks, built for static devices, are ill-equipped to handle AI’s dynamic nature. Traditional periodic reviews fall short when algorithms continuously adapt, potentially introducing biases or errors over time. The FDA’s consultation emphasizes the need for novel post-market strategies, such as real-time monitoring, to address these gaps and ensure that AI tools remain safe and effective throughout their lifecycle.

Stakeholder Insights Driving FDA’s Strategy

To forge a path forward, the FDA has opened the floor to stakeholders, seeking real-world insights through a comprehensive consultation featuring six targeted question sets. Developers, clinicians, and researchers are sharing practical approaches, such as metrics to track AI performance and techniques to spot data drift early. One expert from a prominent medical tech firm noted, “Continuous monitoring isn’t optional; it’s the backbone of trust in AI systems, especially when lives are on the line.”

Feedback from the field reveals both successes and cautionary tales. In one hospital, an AI imaging tool adapted seamlessly to updated protocols through regular data updates, maintaining high accuracy. Conversely, another facility reported a diagnostic AI failing to adjust to a new patient demographic, leading to delayed treatments—a stark reminder of the need for robust oversight mechanisms.

This collaborative approach underscores a shared belief that no single entity can solve these challenges alone. By integrating diverse perspectives, the FDA aims to craft policies that reflect actual clinical experiences, ensuring that guidelines are not only theoretical but also actionable. The emphasis on real-world evidence signals a shift toward pragmatic regulation that can keep pace with AI’s rapid evolution.

Practical Steps to Ensure AI Effectiveness

Turning challenges into solutions requires concrete strategies, and the FDA’s consultation highlights several actionable paths. Establishing standardized performance metrics tailored to AI’s adaptive nature is a starting point. Metrics focusing on input-output data shifts can help identify when a device’s reliability begins to wane, providing early warnings for intervention.

Additionally, scalable post-market monitoring systems are essential. Leveraging real-world data from hospitals and clinics can enable regulators and developers to detect issues in real time, rather than relying on outdated snapshots. For instance, automated alerts for performance dips could prompt immediate reassessments, minimizing risks to patients.
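An automated alert of this kind could be as simple as tracking accuracy over the most recent confirmed cases and flagging a drop below the validated baseline. The following is a minimal sketch under stated assumptions: the class name, the window size, and the allowed drop are hypothetical choices, and a real system would also need to handle delayed or missing ground-truth labels.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Tracks accuracy over the last `window_size` confirmed cases."""

    def __init__(self, baseline_accuracy, window_size=100, max_drop=0.05):
        self.baseline_accuracy = baseline_accuracy  # accuracy at approval (assumed known)
        self.max_drop = max_drop                    # tolerated decline before alerting
        self.outcomes = deque(maxlen=window_size)   # True = prediction matched the label

    def record(self, prediction, confirmed_label):
        """Store one confirmed case and return whether an alert is active."""
        self.outcomes.append(prediction == confirmed_label)
        return self.alert()

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def alert(self):
        # Wait for a full window so a few early errors do not trigger an alert.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy() < self.baseline_accuracy - self.max_drop

# Hypothetical usage: ten correct cases, then two misses.
monitor = RollingAccuracyMonitor(baseline_accuracy=0.9, window_size=10, max_drop=0.05)
for _ in range(10):
    monitor.record("positive", "positive")
first_miss = monitor.record("negative", "positive")
second_miss = monitor.record("negative", "positive")
```

Using a fixed-size window means old cases fall out automatically, so the alert reflects recent behavior instead of lifetime averages.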

Finally, clear triggers for reevaluation, coupled with sustained collaboration among stakeholders, can keep AI systems aligned with safety standards. Defining when an algorithm needs updating—whether due to new clinical guidelines or demographic shifts—ensures timely action. By fostering ongoing dialogue with the medtech community, the FDA can refine these strategies, striking a balance between innovation and accountability in this transformative space.
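Reevaluation triggers like these can be written down as explicit rules, which makes them auditable. The sketch below assumes three hypothetical signals (a drift score, a measured accuracy drop, and a guideline-change flag) and placeholder limits; the names and numbers are illustrative and are not taken from the consultation.

```python
from dataclasses import dataclass

@dataclass
class DeviceStatus:
    drift_score: float        # e.g. a drift statistic on input data (assumed)
    accuracy_drop: float      # baseline accuracy minus current accuracy
    guideline_changed: bool   # a relevant clinical guideline was updated

def reevaluation_reasons(status, drift_limit=0.25, accuracy_drop_limit=0.05):
    """Return the list of triggers that fired; empty means no action needed."""
    reasons = []
    if status.guideline_changed:
        reasons.append("clinical guideline updated")
    if status.drift_score > drift_limit:
        reasons.append("input data drift")
    if status.accuracy_drop > accuracy_drop_limit:
        reasons.append("accuracy decline")
    return reasons

# Hypothetical status report for one deployed device.
status = DeviceStatus(drift_score=0.31, accuracy_drop=0.02, guideline_changed=True)
reasons = reevaluation_reasons(status)
print(reasons)
```

Returning the reasons instead of a single yes/no flag keeps a record of why a review was requested, which may matter when developers and regulators later discuss the decision.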

Reflecting on a Path Forward

The FDA’s decision to engage the public in shaping oversight for AI medical devices marks a pivotal moment in healthcare regulation. The collaborative effort highlights the balance between harnessing AI’s potential and mitigating its risks, keeping patient safety at the forefront of technological progress.

The emphasis on real-world data and continuous monitoring is emerging as a cornerstone for future policies. These insights could pave the way for adaptive frameworks that evolve alongside AI technologies and offer a blueprint for other sectors facing similar challenges.

Moving ahead, the focus shifts toward implementation. Stakeholders are encouraged to prioritize scalable solutions, advocate for standardized metrics, and maintain open channels of communication with regulators, so that AI in healthcare develops responsibly and continues to protect patients.