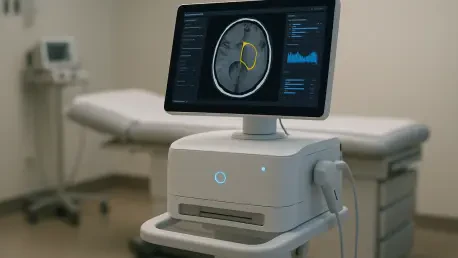

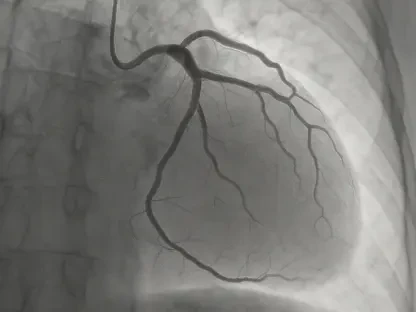

The U.S. Food and Drug Administration (FDA) faces a fractured landscape as it works to build a regulatory framework for the real-world performance of medical devices driven by artificial intelligence (AI) and machine learning (ML). A public call for input drew more than 100 comments and exposed a sharp divide among three key stakeholder groups: the medical device industry, frontline healthcare providers, and the patients whose lives these technologies affect. At the heart of the debate is a fundamental disagreement over two questions: how to ensure the ongoing safety and effectiveness of increasingly sophisticated tools, including generative AI, and how to mitigate the decline in performance after deployment in clinical settings, commonly called “model drift.” The FDA's challenge is to synthesize these divergent views into a cohesive strategy that supports rapid innovation without compromising patient safety.

Industry’s Stance: Leverage Existing Rules

Medical technology companies and their trade groups, including AdvaMed and the Medical Device Manufacturers Association (MDMA), have presented a unified front against new, universal postmarket monitoring requirements for all AI-enabled devices. Their central argument is that such prescriptive rules would duplicate existing structures and create unnecessary bureaucratic hurdles that could stifle innovation and even compromise patient safety. Instead, industry commenters overwhelmingly urge the FDA to rely on its current regulatory frameworks, most notably the Quality Management System Regulation (QMSR). They contend that the QMSR already provides a robust and sufficiently flexible mechanism for overseeing design validation, risk management, and postmarket surveillance, which makes a separate set of AI-specific rules redundant. In short, the industry places its trust in established systems to keep AI technologies safe and effective without a new layer of mandates.

Industry stakeholders have also championed a risk-based approach, firmly rejecting any “one-size-fits-all” policy for postmarket monitoring. Under this principle, the intensity and nature of oversight should be proportional to the risk a specific device poses to patients. To support this view, the MDMA drew a distinction between types of AI models. It argued that “locked” models, which remain static and do not change autonomously after deployment, present a lower risk profile and may need no special monitoring beyond standard controls. By contrast, it acknowledged that “continual machine learning models,” which update autonomously as they encounter new data, introduce far greater complexity and potential for unforeseen risks. For these adaptive systems, the industry concedes that specific, targeted monitoring mechanisms may be appropriate, signaling a willingness to accept tailored oversight for higher-risk technologies while preserving flexibility for more stable ones.

Healthcare Providers: A Call for Manufacturer Accountability

Hospitals and professional medical groups, represented by organizations such as the American Hospital Association (AHA), agree that postmarket monitoring of AI devices is crucial for patient safety. However, they firmly assert that this responsibility belongs to device manufacturers, not to the clinicians or healthcare facilities that use the tools. The stance reflects practical realities: many hospitals, particularly smaller, rural, and critical access facilities, lack the specialized staff, financial resources, and technical expertise required for robust AI governance and ongoing performance monitoring. Providers argue that shifting this burden onto them would be both impractical and inequitable, potentially creating disparities in patient safety based on a hospital’s size or location. In their view, manufacturers, as the creators of the technology, are the only party equipped to handle this complex task.

The “black box” problem inherent in many sophisticated AI systems strengthens this call for manufacturer accountability. The AHA highlighted the opacity of these algorithms, which often makes it exceedingly difficult for end users to independently detect underlying flaws, biases, or performance degradation in a model’s analyses and recommendations. To address this, provider groups offered the FDA several concrete proposals. They recommended updating adverse event reporting metrics to capture AI-specific risks such as algorithmic bias, “hallucinations” (confident but false outputs), and model drift. They also suggested that the FDA impose risk-based monitoring requirements directly on manufacturers, ranging from periodic revalidation for lower-risk devices to continuous surveillance for higher-risk ones. The message from the clinical front line is clear: accountability must rest with those who design and profit from the technology.

The Patient Perspective: Demanding Transparency and Human-Centered Metrics

Patients and their advocates added a critical, human-centered dimension to the regulatory discussion, shifting the focus toward transparency and toward performance metrics that reflect the real-world patient experience. Advocates such as Andrea Downing of the Light Collective argued that the harms of an AI device’s failure or malfunction are often invisible in traditional clinical reporting. They called for evaluations to include measures of “patient burden,” a concept that captures both the tangible and intangible costs to individuals. These include extra medical appointments or diagnostic tests, delays in receiving appropriate care, confusion when an AI-driven care plan changes without explanation, and the emotional or mental distress that arises when an automated output contradicts a patient’s own lived experience and knowledge of their body.

Patient advocates also rejected the idea that FDA approval marks the end of oversight. Healthcare AI strategist Dan Noyes, drawing on personal experience, stressed the urgent need for full transparency, including clear disclosure to patients of when and how AI tools are used in their care decisions. He and other advocates called for notifications whenever AI models are updated or changed and, crucially, for rigorous testing across diverse patient populations to ensure equitable performance and expose hidden biases. Taken together, the patient voice reframed the regulatory process: approval is not a finish line but a starting line, the point at which the true responsibility to monitor a device’s real-world impact begins.