The immense pressure on modern healthcare systems, driven by workforce shortages and the complexities of value-based care, has created a critical need for solutions that move beyond simple automation. While initial forays into artificial intelligence offered tools like chatbots and basic data processors, the next frontier involves AI systems that can collaborate, learn, and reason in ways that mirror the cognitive workflows of experienced medical professionals. The challenge is not merely to build an algorithm that identifies a pattern but to develop a digital colleague capable of understanding context, adapting to new information, and augmenting the nuanced judgment of a clinical team. This evolution is giving rise to multi-agent AI systems: teams of specialized AI agents, enhanced with large language models, self-reflection capabilities, and sophisticated tools, that are designed to tackle intricate healthcare processes, reduce manual burdens, and ultimately improve care coordination and alignment with patient preferences. The goal is to transform clinical decision support from a static reference tool into a dynamic, learning partner in patient care.

From Static Models to Dynamic Learning

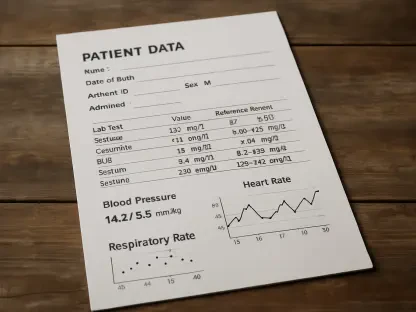

A compelling real-world application of this advanced approach comes from BJC Healthcare, which grappled with the sensitive and complex process of end-of-life and advance care planning. The organization’s initial system utilized a deep learning model to generate mortality risk scores, identifying patients who might benefit from these crucial conversations. However, the model’s output was just the starting point. A dedicated team of retired clinicians then undertook the laborious task of manually reviewing each high-risk patient’s chart to determine if outreach was truly appropriate. While this human-led review process was highly accurate, it presented significant operational challenges. It was inherently slow, resource-intensive, and, most importantly, static. The collective wisdom of the reviewers was not systematically captured or integrated back into the model, meaning the system itself never learned from their expert decisions. This created a bottleneck that limited the program’s scalability and prevented the continuous improvement necessary to adapt to evolving clinical insights and patient populations, highlighting a fundamental limitation of traditional AI in high-stakes medical environments.

To overcome these limitations, the organization developed a “learning reviewer agent,” a sophisticated component of a new multi-agent system designed to transform the entire workflow. This agent was not built in a vacuum; it was trained on a rich dataset of over 35,000 patient cases, which included the original risk scores, comprehensive clinical notes, and, crucially, the final decisions made by the human reviewers. By applying supervised learning techniques to this historical data, the agent learned to mimic the nuanced decision-making patterns of experienced clinicians. More importantly, its development did not stop there. The system was designed to incorporate live reinforcement learning, creating a feedback loop where ongoing input from practicing clinicians continuously refines the agent’s performance. This dynamic approach allows the AI to move beyond simply replicating past decisions and begin to enhance them, progressively improving its accuracy and adapting its recommendations based on real-time clinical validation, effectively becoming a constantly evolving repository of expert judgment.
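The two-stage pattern described above, fitting on historical reviewer decisions and then continuing to update from live clinician feedback, can be illustrated with a deliberately small sketch. Everything here is an assumption made for illustration: the `ReviewerAgent` class, the two numeric features, and the synthetic data are hypothetical, and the live update shown is a plain online supervised step standing in for the reinforcement learning loop the article mentions, not the organization's actual method.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class ReviewerAgent:
    """Hypothetical stand-in for a learning reviewer: logistic regression
    trained by stochastic gradient descent on numeric case features."""

    def __init__(self, n_features: int, lr: float = 0.1) -> None:
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def predict_proba(self, x: list[float]) -> float:
        """Estimated probability that a reviewer would approve outreach."""
        return sigmoid(sum(wi * xi for wi, xi in zip(self.w, x)) + self.b)

    def update(self, x: list[float], label: int) -> None:
        """One gradient step on the log loss for a single labeled case."""
        err = self.predict_proba(x) - label
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * err

    def fit(self, cases: list[tuple[list[float], int]], epochs: int = 50) -> None:
        """Stage 1: supervised learning over historical reviewer decisions."""
        for _ in range(epochs):
            for x, y in cases:
                self.update(x, y)


random.seed(0)


def make_case() -> tuple[list[float], int]:
    """Synthetic case: [risk score, note flag] plus a made-up reviewer decision."""
    risk = random.random()
    note_flag = random.choice([0.0, 1.0])
    label = 1 if risk + 0.3 * note_flag > 0.7 else 0
    return [risk, note_flag], label


# Stage 1: learn from (synthetic) historical cases.
historical = [make_case() for _ in range(500)]
agent = ReviewerAgent(n_features=2)
agent.fit(historical)
accuracy = sum(
    (agent.predict_proba(x) >= 0.5) == bool(y) for x, y in historical
) / len(historical)

# Stage 2: a clinician overrides the agent on a new case; fold that in at once.
new_case = [0.2, 0.0]
before = agent.predict_proba(new_case)
agent.update(new_case, 1)  # clinician judged outreach appropriate
after = agent.predict_proba(new_case)
print(f"training accuracy={accuracy:.2f}, before={before:.3f}, after={after:.3f}")
```

The point of the sketch is the shape of the loop rather than the model: the same `update` step serves both the batch fit and the live correction, so each clinician decision nudges the agent without a separate retraining cycle. A production system would need real features extracted from clinical notes, safeguards against noisy feedback, and the evaluation process described later in the article.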

A Blueprint for Institutional Memory

The implementation of this learning agent represented more than just an algorithmic upgrade; it pioneered a model for creating “memory-augmenting” systems that build and retain institutional knowledge. Unlike static AI models that require periodic and cumbersome retraining, these agentic systems are architected for continuous learning. They store and reuse insights gained during their deployment, allowing them to become increasingly attuned to local practice patterns and the subtle, unwritten rules that often guide expert clinical decisions. This capability was made possible through a structured and phased framework that could serve as a blueprint for other healthcare organizations. The process involved meticulously defining the roles of different AI agents within the system, establishing robust feedback loops for clinician input, and executing a cautious evaluation process. This included extensive testing on historical data, followed by a “silent mode” where the agent’s recommendations were generated and validated against human decisions without impacting live patient care. Only after proving its safety and efficacy was the system fully deployed with ongoing post-deployment monitoring. This methodical approach provided a roadmap for integrating advanced, learning AI into high-stakes domains like chronic disease management, demonstrating that success depended as much on the implementation strategy as on the technology itself.
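The "silent mode" step lends itself to a short sketch: the agent's recommendations are logged next to the human decisions, summary metrics are computed, and deployment is gated on them. The record fields, the choice of metrics, and the thresholds below are all hypothetical assumptions for illustration; the article does not state which criteria the organization actually used.

```python
from dataclasses import dataclass


@dataclass
class SilentModeRecord:
    """One case scored in silent mode (field names are hypothetical)."""
    case_id: str
    agent_recommends_outreach: bool
    human_recommends_outreach: bool


def silent_mode_report(records: list[SilentModeRecord]) -> dict:
    """Compare agent output with human decisions without affecting care."""
    if not records:
        raise ValueError("no silent-mode records to evaluate")
    n = len(records)
    agree = sum(
        r.agent_recommends_outreach == r.human_recommends_outreach for r in records
    )
    human_pos = [r for r in records if r.human_recommends_outreach]
    caught = sum(r.agent_recommends_outreach for r in human_pos)
    return {
        "n": n,
        "agreement": agree / n,
        # Share of human-approved outreach cases the agent also flagged.
        "sensitivity": caught / len(human_pos) if human_pos else None,
    }


def ready_for_deployment(
    report: dict,
    min_cases: int = 100,          # assumed threshold
    min_agreement: float = 0.9,    # assumed threshold
    min_sensitivity: float = 0.9,  # assumed threshold
) -> bool:
    """Gate full deployment on silent-mode performance."""
    sens = report["sensitivity"]
    return (
        report["n"] >= min_cases
        and report["agreement"] >= min_agreement
        and sens is not None
        and sens >= min_sensitivity
    )


records = [
    SilentModeRecord("a", True, True),
    SilentModeRecord("b", False, False),
    SilentModeRecord("c", True, True),
    SilentModeRecord("d", False, True),   # agent missed a case humans approved
    SilentModeRecord("e", False, False),
]
report = silent_mode_report(records)
go_live = ready_for_deployment(report, min_cases=5)
print(report, go_live)
```

Tracking sensitivity separately from overall agreement is a design choice worth noting: in a program like this, a missed patient who would have benefited from a conversation is arguably a costlier error than an unnecessary review, so a single agreement figure could hide the failure that matters most.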