As artificial intelligence reshapes healthcare, the U.S. Food and Drug Administration (FDA) has opened a public consultation on how to keep AI-enabled medical devices safe and effective after they reach the market. The move responds to growing concern about how these tools perform in real-world clinical settings over time. Conventional devices have largely fixed, predictable functionality. AI systems, by contrast, can see their performance shift as the data, patients, and workflows around them change. The FDA’s request for feedback, which opened recently and closes on December 1, reflects a commitment to addressing these challenges. By engaging stakeholders, the agency aims to develop strategies for ongoing evaluation so that these technologies deliver consistent benefits without compromising patient safety.

Addressing the Challenge of Data Drift

Data drift poses a substantial hurdle for AI-enabled medical devices because it can cause a gradual decline in performance that may go unnoticed without proper oversight. Drift occurs when changes in external factors, such as patient demographics, clinical practices, or the characteristics of input data, make the data a system sees in deployment differ from the data it was developed and validated on, eroding its original accuracy and reliability. Unlike static medical tools, these systems depend on the data they receive, so their behavior can shift after initial approval even when the underlying model itself is unchanged. The FDA has noted that traditional regulatory frameworks, designed for more predictable devices, are poorly suited to anticipating or managing this variability. Closing that gap calls for monitoring that can detect subtle changes before they put patients at risk. As AI becomes more integrated into diagnosis and treatment, understanding and mitigating data drift is critical to maintaining trust in these technologies and ensuring they continue to meet safety standards across diverse healthcare settings.
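The consultation does not prescribe a method for detecting drift, but one widely used statistic gives a concrete sense of what "detecting subtle changes" can mean. The population stability index (PSI) compares how a baseline sample (for example, the data used to validate a device) and a recent sample of the same input are distributed across a fixed set of bins. The Python sketch below is a minimal, hypothetical illustration; the function name, the bin edges, and the `eps` smoothing constant are assumptions for this example, not part of any FDA guidance.

```python
import bisect
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bin_edges: Sequence[float],
    eps: float = 1e-6,
) -> float:
    """Hypothetical drift check for one input feature.

    PSI = sum over bins of (cur_i - base_i) * ln(cur_i / base_i), where
    base_i and cur_i are the fractions of each sample falling in bin i.
    Values outside [bin_edges[0], bin_edges[-1]] are ignored.
    """
    n_bins = len(bin_edges) - 1

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * n_bins
        for v in values:
            idx = bisect.bisect_right(bin_edges, v) - 1
            if idx == n_bins and v == bin_edges[-1]:
                idx -= 1  # include the top edge in the last bin
            if 0 <= idx < n_bins:
                counts[idx] += 1
        total = max(sum(counts), 1)
        # eps keeps empty bins from causing log(0) or division by zero.
        return [max(c / total, eps) for c in counts]

    base = bin_fractions(baseline)
    cur = bin_fractions(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


# Illustrative only: patient ages at validation vs. in recent use.
edges = [0, 40, 60, 80, 120]
validation_ages = [25, 35, 45, 55, 65, 75, 30, 50, 70, 85]
recent_ages = [65, 70, 72, 78, 81, 85, 88, 90, 62, 75]
# The recent cohort skews older, so PSI is well above 0.25.
psi = population_stability_index(validation_ages, recent_ages, edges)
```

A common rule of thumb from other industries treats PSI values below about 0.1 as little change and values above about 0.25 as a significant shift; any threshold used for a medical device would need to be justified for that device and its intended use.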

Beyond the technical aspects of data drift, the FDA is also weighing its implications for patient outcomes and healthcare equity. If left unaddressed, performance degradation can disproportionately affect certain patient populations, introducing biases that skew results or limit access to effective care. For instance, shifts in user behavior or updated clinical guidelines might change the data a device receives or how its outputs are used, leading to inconsistent or inaccurate results. The agency is therefore seeking input on how to identify early warning signs of such issues and correct them quickly. This includes drawing on real-world evidence from clinical environments to understand how devices function outside controlled testing. By focusing on scalable solutions, the FDA hopes to establish monitoring mechanisms that keep performance reliable across varied contexts and prevent unforeseen changes in device behavior from compromising patient safety.

Developing Effective Post-Market Monitoring Strategies

A core focus of the FDA’s consultation is establishing practical, systematic post-market monitoring strategies that ensure the long-term effectiveness of AI-enabled medical devices. The agency recognizes that pre-market evaluations, while essential, cannot fully predict how these technologies will perform once deployed in dynamic clinical settings. Continuous assessment is therefore needed to capture real-world data and identify performance issues as they arise. The FDA is particularly interested in the methods healthcare providers and developers already use to track device performance over time, including performance metrics and evaluation techniques that could scale across institutions. By fostering dialogue with industry stakeholders, the agency aims to build a framework that balances the rapid pace of innovation with the imperative to protect public health, so that AI tools remain both cutting-edge and dependable.

Another critical aspect of post-market monitoring is understanding how human-AI interaction affects device performance over extended periods. The way clinicians and patients engage with these systems can significantly influence outcomes, sometimes in ways that are difficult to anticipate during initial testing. The FDA is exploring how to define triggers that would prompt further evaluation or intervention, such as unexpected changes in device outputs or user feedback indicating potential problems. The agency also seeks best practices for post-deployment data collection so that the information gathered is both comprehensive and actionable. This approach reflects a shift toward adaptive regulatory models that prioritize ongoing vigilance over one-time approvals. By integrating real-world evidence into monitoring plans, the FDA aims to create a system that can respond to emerging risks while supporting continued advances in medical AI.
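The consultation leaves open how such triggers should be defined. As one hypothetical illustration, a trigger could compare a device's accuracy over its most recent confirmed cases with the accuracy seen during pre-market validation and flag the device for review when the gap exceeds a tolerance. This Python sketch assumes that ground-truth outcomes (for example, clinician-confirmed diagnoses) eventually become available for each case; the class name, the 200-case default window, and the 5-point default tolerance are illustrative choices, not FDA requirements.

```python
from collections import deque
from typing import Optional


class RollingAccuracyTrigger:
    """Hypothetical post-market trigger based on rolling accuracy.

    baseline_accuracy: accuracy from pre-market validation (assumed known).
    tolerance: how far rolling accuracy may fall before review is triggered.
    window: number of most recent confirmed cases to evaluate.
    """

    def __init__(self, baseline_accuracy: float,
                 tolerance: float = 0.05, window: int = 200) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        self.window = window
        # deque with maxlen silently drops the oldest case when full.
        self._outcomes = deque(maxlen=window)

    def record(self, prediction_correct: bool) -> None:
        """Log whether the device output matched the confirmed outcome."""
        self._outcomes.append(bool(prediction_correct))

    def rolling_accuracy(self) -> Optional[float]:
        """Accuracy over the window, or None until the window is full."""
        if len(self._outcomes) < self.window:
            return None
        return sum(self._outcomes) / len(self._outcomes)

    def needs_review(self) -> bool:
        """True when rolling accuracy falls below baseline minus tolerance."""
        acc = self.rolling_accuracy()
        return acc is not None and acc < self.baseline_accuracy - self.tolerance
```

Waiting for a full window before evaluating avoids raising alarms on a handful of early cases; in practice the window size trades detection speed against false alarms, and a real monitoring plan might also track input drift or user overrides alongside accuracy.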

Shaping Future Regulatory Frameworks

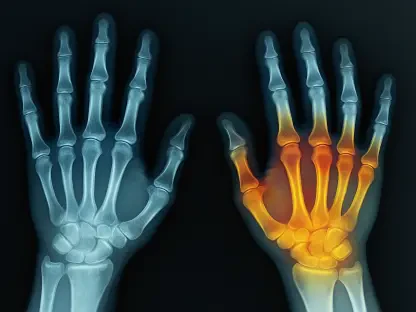

The FDA’s initiative marks a pivotal moment for regulatory oversight of AI in healthcare, underscoring the need for frameworks that can keep pace with innovation. Current guidelines, often tailored to traditional devices, lack the flexibility to manage AI systems, whose behavior can change with new data or usage patterns. Through this consultation, the agency is gathering perspectives on how to design policies that accommodate these dynamic traits without stifling technological progress. Key areas of inquiry include defining appropriate performance metrics and establishing standardized methods for real-world evaluation. The goal is a regulatory environment that ensures safety and effectiveness while building confidence among healthcare providers and patients, paving the way for broader adoption of AI in medical practice.

Equally important is collaboration with external stakeholders to refine these frameworks and close gaps in current practice. The FDA is seeking actionable insights from developers, clinicians, and other experts who work with AI devices daily, with the aim of incorporating their experience into regulatory strategy. This includes identifying specific challenges, such as managing performance variability across diverse patient populations and healthcare infrastructures. The agency also wants to explore how to mitigate data drift through proactive measures rather than reactive fixes. By synthesizing this feedback, the FDA intends to develop a cohesive set of guidelines that can be updated as technology advances, keeping oversight relevant and effective. This approach reflects a commitment to balancing the transformative potential of AI with the need to safeguard patient well-being in an increasingly digital healthcare landscape.

Building a Safer Path Forward

The consultation shows the FDA taking a proactive stance on the complexities of AI-enabled medical devices by seeking input from a wide range of voices. The feedback it gathers on data drift and performance variability is intended to lay the groundwork for more robust monitoring practices. After the comment period closes, the agency plans to synthesize this input into actionable policies that prioritize continuous evaluation and risk mitigation. Stakeholders are encouraged to stay involved in shaping these evolving frameworks so that regulation keeps pace with technological change. The emphasis remains on fostering innovation while upholding high safety standards, with a clear focus on integrating real-world evidence into future guidance. The aim of this collaborative effort is a safer, more reliable path for AI in healthcare, one that addresses potential pitfalls before they affect patient care.