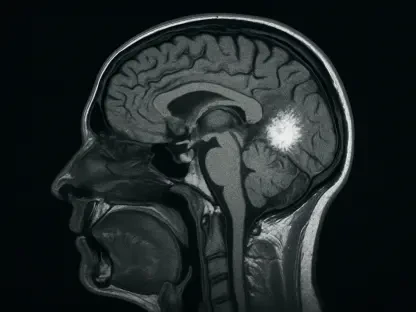

Artificial intelligence (AI) is ushering in a new generation of medical devices with the potential to transform diagnostics and patient care, but a study published in JAMA Health Forum on August 22, 2025, casts a critical light on the risks tied to these innovations. Led by Tinglong Dai of Johns Hopkins Carey Business School, the research examined 950 AI-enabled medical devices authorized by the U.S. Food and Drug Administration (FDA) through November 2024 and found that devices lacking clinical validation are far more prone to recalls. Sixty devices were linked to 182 recall events, often due to diagnostic errors or functionality failures, and 43% of those recalls occurred within a year of authorization, pointing to significant gaps in pre-market evaluation. The findings raise urgent questions about the safety of AI tools in clinical environments and the adequacy of current regulatory frameworks to protect patients from potential harm.

Critical Risks of Insufficient Validation

Recall Rates and Validation Gaps

The connection between inadequate clinical validation and elevated recall rates for AI medical devices emerges as a central concern in the recent study. Among the 950 devices analyzed, those without thorough testing through clinical trials or retrospective studies were significantly more likely to face recalls. Common issues triggering these recalls include diagnostic inaccuracies and delays or complete losses in device functionality. Such failures not only disrupt healthcare delivery but also pose direct risks to patient well-being. The data reveals that a substantial portion of these devices, approved without robust evidence of real-world performance, falter soon after deployment. This pattern underscores a critical flaw in the development pipeline, where the rush to innovate may overshadow the imperative to ensure reliability. As AI continues to integrate into medical practice, the absence of validation stands out as a preventable yet pervasive risk factor that demands immediate attention from developers and regulators alike.

Delving deeper into the recall statistics, the rapid onset of issues post-approval paints a stark picture of the validation gap’s consequences. A striking 43% of recall events occurred within just one year of FDA authorization, suggesting that many AI devices are not adequately tested for the complexities of clinical settings before reaching the market. These early failures often stem from errors in diagnostic algorithms or hardware malfunctions that could have been identified through rigorous pre-market testing. The implications extend beyond mere technical setbacks, as each recall represents potential harm to patients who rely on these tools for critical health decisions. This urgency highlights the need for a shift in how validation is prioritized, ensuring that AI medical devices are not only innovative but also dependable from the moment they are introduced into healthcare systems.
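To put the headline numbers in proportion, the short Python sketch below reworks the figures quoted in this article (950 devices, 60 recalled devices, 182 recall events, 43% of events within a year). It is only illustrative arithmetic, not the study's own analysis. The function and variable names are hypothetical, and because the 43% share is a rounded figure, the count of early recall events is an approximation.

```python
# Figures as quoted in the article; the derived values are simple arithmetic,
# not results reported by the study itself.
DEVICES_ANALYZED = 950
DEVICES_RECALLED = 60
RECALL_EVENTS = 182
SHARE_WITHIN_ONE_YEAR = 0.43  # rounded share of recall events, per the article


def recall_summary(devices, recalled, events, early_share):
    """Return a few derived ratios (hypothetical helper for illustration)."""
    return {
        # Fraction of analyzed devices with at least one recall event
        "device_recall_rate": recalled / devices,
        # Average number of recall events per recalled device
        "events_per_recalled_device": events / recalled,
        # Approximate number of events within one year (43% is rounded)
        "approx_early_events": round(events * early_share),
    }


summary = recall_summary(
    DEVICES_ANALYZED, DEVICES_RECALLED, RECALL_EVENTS, SHARE_WITHIN_ONE_YEAR
)

print(f"Devices with a recall: {summary['device_recall_rate']:.1%}")
print(f"Events per recalled device: {summary['events_per_recalled_device']:.2f}")
print(f"Approx. events within one year: {summary['approx_early_events']}")
```

Read this way, roughly 6% of the analyzed devices account for all of the recall events, with each recalled device averaging about three events, and on the order of 78 of the 182 events fell within the first year.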

Regulatory Pathways and Their Shortcomings

A significant contributor to the recall risks of AI medical devices lies in the regulatory pathways that govern their market entry, particularly the FDA’s 510(k) clearance process. This pathway allows many devices to reach the market by demonstrating substantial equivalence to existing products, often bypassing the requirement for clinical trials. While designed to expedite access to new technologies, this leniency has a downside: devices cleared through this route frequently lack the empirical data needed to confirm their safety and efficacy. The study indicates that a majority of recalled AI devices were cleared via this pathway, exposing a systemic vulnerability. Without mandatory human testing or comprehensive studies, these tools are released into clinical environments where undetected flaws can lead to serious consequences, revealing a gap between regulatory intent and real-world outcomes.

Further examination of the 510(k) pathway’s impact shows how its structure can inadvertently prioritize speed over safety. The absence of stringent clinical validation requirements means that many AI devices enter the market with untested assumptions about their performance under diverse patient conditions. When these assumptions fail, as evidenced by the high rate of early recalls, the burden falls on healthcare providers and patients to navigate the fallout. This regulatory shortfall not only undermines confidence in AI technologies but also raises ethical concerns about deploying unproven tools in life-critical settings. The findings suggest that while the 510(k) process may facilitate innovation, it often does so at the expense of thorough vetting, necessitating a reevaluation of how approval criteria balance efficiency with the imperative to protect public health.

Corporate Disparities in Device Safety

Public vs. Private Company Recall Trends

A striking disparity in recall rates between publicly traded and private companies emerges as a key insight from the study, shedding light on differing approaches to device safety. Public companies, which account for roughly 53% of AI medical devices on the market, were responsible for over 90% of recall events and nearly 99% of recalled units. This disproportionate burden suggests systemic differences in how these entities prioritize validation. Private firms showed a higher tendency to conduct clinical testing: only 40% of their recalled devices lacked validation, compared with 78% of recalled devices from public companies. This gap points to potential influences such as shareholder expectations or competitive pressures that may drive public firms to expedite market entry, often at the cost of rigorous safety checks, highlighting a critical area for industry scrutiny.

Exploring the reasons behind this trend reveals deeper structural issues within publicly traded companies that impact device safety. The nearly sixfold higher likelihood of recall events among public firms compared to their private counterparts suggests that financial targets and market demands could be overshadowing the commitment to thorough validation. Larger public entities, in particular, demonstrated lower rates of clinical testing, possibly due to resources being diverted to scaling production or meeting investor timelines rather than ensuring device reliability. This dynamic creates a risky precedent where innovation is rushed, potentially compromising patient outcomes. Addressing this disparity requires not only regulatory intervention but also a cultural shift within public companies to align their priorities with long-term safety over short-term gains, ensuring that the pursuit of profit does not undermine the integrity of medical technology.

Systemic Pressures and Accountability Challenges

The systemic pressures faced by publicly traded companies further complicate the landscape of AI medical device safety. Unlike private firms, which may have more flexibility to delay market entry for additional testing, public companies often operate under intense scrutiny from investors and competitors, pushing for rapid deployment of new technologies. This environment can lead to shortcuts in the validation process, as seen in the high percentage of unvalidated recalled devices from these firms. The study’s findings indicate that such pressures result in a tangible impact on healthcare, with frequent recalls disrupting clinical workflows and eroding trust in AI tools. Tackling this issue demands a nuanced approach that considers how corporate governance and market dynamics influence safety practices, urging a reevaluation of accountability measures for public entities in the medical technology sector.

Beyond immediate pressures, the accountability challenges for public companies highlight a broader need for industry-wide standards that enforce validation regardless of corporate structure. The disparity in recall outcomes suggests that without external mandates, public firms may continue to deprioritize clinical testing in favor of financial objectives. This trend poses a significant risk to patient safety, as the majority of recalled units stem from these entities, affecting a wide range of medical applications. Strengthening accountability could involve tying executive incentives to safety metrics or imposing stricter penalties for non-compliance with validation protocols. By addressing these systemic issues, the industry can work toward a future where corporate status does not dictate the reliability of AI medical devices, ensuring that all patients benefit from safe and effective technological advancements.

Implications for Patient Safety and Policy

Urgent Need for Enhanced Oversight

The rapid occurrence of recalls, often within a year of FDA authorization, casts a troubling shadow over patient safety and the reliability of AI medical devices. Each recall event, frequently tied to diagnostic errors or functionality losses, represents a potential threat to individuals relying on these tools for critical health decisions. The study’s finding that many of these devices lack clinical validation prior to market entry amplifies concerns about their real-world performance. Beyond immediate risks, frequent recalls can erode public trust in AI-driven healthcare solutions, hindering the adoption of potentially life-saving innovations. This situation calls for enhanced oversight mechanisms that prioritize patient well-being, ensuring that devices are not only cutting-edge but also dependable. Stronger pre-market testing and continuous post-market monitoring stand out as essential steps to mitigate these risks and safeguard trust in medical technology.

Addressing the oversight gap requires actionable strategies to bolster device safety from development through deployment. Proposals from the study include mandating clinical trials before approval and incentivizing companies to collect real-world performance data after market entry. Such measures would help identify potential issues before they escalate into recalls, protecting patients from harm. Additionally, fostering transparency in validation processes could empower healthcare providers to make informed choices about the tools they use. The urgency of these reforms is underscored by the sheer volume of recalls linked to unvalidated devices, which signal a pressing need to shift from reactive responses to proactive prevention. By embedding rigorous testing into the lifecycle of AI medical devices, the industry can better align innovation with the fundamental goal of ensuring safe, effective care for all patients.

Calls for Regulatory Reform

Criticism of the current FDA review processes, particularly the 510(k) clearance pathway, forms a pivotal part of the discourse on improving AI medical device safety. This pathway, while efficient in bringing new technologies to market, often allows devices to bypass comprehensive clinical validation, contributing to the high recall rates observed. The study advocates for reforms that would require human testing or clinical trials as a standard prerequisite for market entry, addressing the root causes of post-market failures. Additional suggestions include policies under which clearances could be revoked after a set period, potentially five years, if no validation data are provided, adding a layer of accountability. These proposed changes aim to close existing loopholes, ensuring that only thoroughly vetted devices reach clinical settings and thereby reducing the likelihood of safety issues.

The push for regulatory reform also reflects a growing consensus on the need to balance innovation with stringent safety standards. The FDA’s draft guidances from recent years on enhancing the 510(k) program, though not yet finalized, indicate an awareness of these challenges, yet the pace of change remains a concern. Implementing mandatory validation requirements could serve as a deterrent to premature market releases, compelling manufacturers to invest in robust testing. Moreover, ongoing post-market surveillance could provide critical insights into device performance, enabling timely interventions before issues escalate. Past recall trends make it evident that, without decisive regulatory action, the risks associated with unvalidated AI devices will persist as a significant threat to healthcare quality, underscoring the importance of evolving policies to meet the demands of modern medical technology.