The promise of artificial intelligence in radiology is immense, but many healthcare organizations are discovering a frustrating gap between an AI model’s advertised performance and its actual effectiveness in their own clinical environment. This “performance drift” happens because an algorithm validated in a controlled setting often struggles when faced with the unique complexities of a real-world workflow. The fundamental question for any clinical leader is shifting from the general “Does this AI work?” to the far more critical and practical question, “Does it work here?”

The Universal AI Myth: Why One Size Fits All Fails

The Problem of Local Variability

The core assumption that a single, universally trained artificial intelligence model can be deployed effectively across diverse healthcare organizations is proving to be a significant fallacy in modern radiology. Health IT teams and clinical leaders are frequently offered AI models that vendors claim “work everywhere,” a premise built on the flawed idea that an algorithm’s performance is an inherent, portable property. In reality, an AI model’s efficacy is tied to the specific context in which it operates. Regulatory clearance provides a baseline of confidence and safety, but it does not, and cannot, guarantee consistent performance across the wide spectrum of real-world clinical settings. Every healthcare facility has a unique data fingerprint, shaped by local factors such as imaging equipment, acquisition protocols, patient mix, and workflow. This variability is not a minor detail; it is the central challenge that determines whether an AI tool becomes a valuable asset or an expensive, unreliable liability that fails to meet clinical expectations.

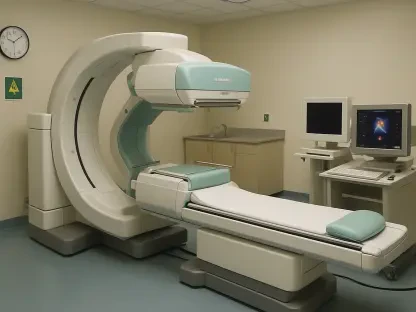

This variability in practice is the primary reason universal, or “frozen,” models fail to perform consistently from one institution to another. The differences show up as concrete variations in factors that directly alter the data the AI processes. Image acquisition protocols differ significantly; radiologists trained at different institutions learn distinct methods for scanning patients and carry those protocols into practice. The make, model, and calibration of imaging hardware such as MRI and CT scanners also vary between sites. Patient populations add another layer of complexity, because demographic and clinical characteristics influence the prevalence and presentation of particular conditions. Finally, clinical workflows and staffing patterns create a unique operational context. Together, these variations produce different pixel distributions and data characteristics, so an AI model “frozen” after training on data from one environment is ill-equipped to handle them, and its accuracy and reliability degrade when it is deployed elsewhere.
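One way to make “different pixel distributions” concrete is to compare a sample of pixel intensities from a site’s own scanners with a reference sample drawn from the data the model was trained on. The Python sketch below is an illustration, not any vendor’s method: it computes the two-sample Kolmogorov-Smirnov statistic, the largest vertical gap between the two empirical cumulative distributions. It assumes both samples are available as plain lists of numbers; the sample values are invented, and real drift monitoring would likely examine many more features than raw intensity.

```python
from bisect import bisect_right
from typing import Sequence


def ks_statistic(reference: Sequence[float], local: Sequence[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic.

    Returns the largest absolute gap between the empirical CDFs of the two
    samples: 0.0 means identical distributions, 1.0 means no overlap at all.
    """
    if not reference or not local:
        raise ValueError("both samples must be non-empty")
    ref = sorted(reference)
    loc = sorted(local)
    max_gap = 0.0
    # Both empirical CDFs are step functions that only change at observed
    # values, so checking every observed value is enough to find the maximum.
    for x in ref + loc:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_loc = bisect_right(loc, x) / len(loc)
        max_gap = max(max_gap, abs(cdf_ref - cdf_loc))
    return max_gap


if __name__ == "__main__":
    # Hypothetical intensity samples: the local scanner reads ~30 units brighter.
    training_site = [100, 110, 120, 130, 140]
    local_site = [130, 140, 150, 160, 170]
    print(f"KS statistic: {ks_statistic(training_site, local_site):.2f}")  # prints 0.60
```

A value near 0 suggests the local data resembles the training data; how large a value should trigger concern is a local judgment the article does not specify.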

Frozen Models in a Dynamic World

Most commercially available AI tools are developed as “frozen models”: their underlying algorithms are fixed once the initial training phase is complete, and they do not adapt or learn from new data. This rigidity is their greatest weakness in the fluid, heterogeneous environments of clinical practice. When a frozen model trained on data from one source encounters images whose pixel distributions or other characteristics differ because of a new facility’s local protocols, its performance can degrade significantly. This mismatch between a fixed algorithm and a variable real-world setting is the principal cause of the performance drift that so many institutions experience. The drift undermines the AI’s potential to improve efficiency and accuracy, and it erodes the clinical trust that is essential for successful adoption and integration into daily workflows.
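The question “Does it work here?” can be checked directly: run the frozen model on a set of locally labeled studies and compare its sensitivity and specificity at its fixed decision threshold with the figures the vendor reports. The Python sketch below assumes the model emits one score per study and that a fixed threshold (hypothetically 0.5) turns scores into positive or negative calls; the scores and labels in the example are invented for illustration.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(
    scores: Sequence[float], labels: Sequence[int], threshold: float
) -> Tuple[float, float]:
    """Sensitivity and specificity of a fixed-threshold classifier.

    labels: 1 = finding present (per local ground truth), 0 = absent.
    A study is called positive when its score is >= threshold.
    """
    tp = fn = tn = fp = 0
    for score, label in zip(scores, labels):
        called_positive = score >= threshold
        if label and called_positive:
            tp += 1
        elif label:
            fn += 1  # missed finding
        elif called_positive:
            fp += 1  # false alarm
        else:
            tn += 1
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")
    specificity = tn / (tn + fp) if tn + fp else float("nan")
    return sensitivity, specificity


if __name__ == "__main__":
    FROZEN_THRESHOLD = 0.5  # hypothetical threshold fixed at training time
    # Hypothetical local results: scores for true positives run lower here.
    local_scores = [0.9, 0.45, 0.4, 0.2, 0.1, 0.6]
    local_labels = [1, 1, 1, 0, 0, 0]
    sens, spec = sensitivity_specificity(local_scores, local_labels, FROZEN_THRESHOLD)
    print(f"local sensitivity={sens:.2f}, specificity={spec:.2f}")
```

If the local figures fall well below the reported ones, that gap is the performance drift, measured on the institution’s own cases.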

The consequences of this performance degradation are far-reaching and can actively hinder the very processes the technology was meant to improve. An AI that is not finely tuned to its operational environment can generate an unacceptable rate of false positives or, more dangerously, miss critical findings, leading to diagnostic errors. Instead of streamlining workflows, it creates additional work, forcing radiologists to constantly second-guess the algorithm’s outputs and manually verify its suggestions. This negates the intended benefits of speed and support, turning a promising technological aid into a source of clinical friction. The problem lies not in the theoretical capability of artificial intelligence but in the practical inability of a single, static model to generalize its performance across the vast and varied landscape of modern healthcare institutions. This failure to adapt makes the investment in such technology questionable, as the return on investment diminishes with every inaccurate or unreliable result produced.

A New Paradigm: The AI Foundry for Localized Solutions

Building for a Market of One

In response to the shortcomings of one-size-fits-all AI, a business model described as an “AI Foundry” is emerging as an alternative. Rather than selling a fixed, off-the-shelf application, it provides a platform and the components healthcare organizations need to build or fine-tune AI models tailored to their own circumstances. A radiology team can use its own local data, which already reflects its specific patient population, imaging techniques, and scanner configurations. By training or adjusting an algorithm on this data, the organization can keep the AI’s behavior consistent with its established clinical and operational protocols. The result is a bespoke solution for a “market of one”: technology built not to work in general, but to solve a specific problem for a single client, which maximizes its relevance, accuracy, and value in that environment.
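The article does not describe how the AI Foundry’s training or fine-tuning works. As one lightweight, hypothetical illustration of “adjusting an algorithm” on local data, the Python sketch below fits a Platt-style logistic recalibration: it leaves the frozen model untouched and learns two numbers, `a` and `b`, that map the model’s raw score to a probability fitted to the site’s own labeled cases. This is a swapped-in technique for illustration, not the Foundry’s method, and the example scores are invented.

```python
import math
from typing import Sequence, Tuple


def _sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_platt(
    scores: Sequence[float],
    labels: Sequence[int],
    lr: float = 0.5,
    epochs: int = 2000,
) -> Tuple[float, float]:
    """Fit p = sigmoid(a * score + b) to local labels by gradient descent
    on the mean log-loss. The frozen model's scores are not changed."""
    a, b = 1.0, 0.0  # start by treating the raw score as an uncalibrated logit
    n = len(scores)
    for _ in range(epochs):
        grad_a = grad_b = 0.0
        for s, y in zip(scores, labels):
            err = _sigmoid(a * s + b) - y  # d(log-loss)/dz for one case
            grad_a += err * s
            grad_b += err
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b


def recalibrated_probability(a: float, b: float, score: float) -> float:
    return _sigmoid(a * score + b)


if __name__ == "__main__":
    # Hypothetical raw scores (logits) on local studies: the frozen model
    # systematically under-scores positives at this site.
    raw = [-3.0, -2.5, -2.0, -1.0, -0.5, 0.0]
    truth = [0, 0, 0, 1, 1, 1]
    a, b = fit_platt(raw, truth)
    for s in raw:
        print(f"raw={s:+.1f}  recalibrated p={recalibrated_probability(a, b, s):.2f}")
```

A recalibration like this can correct a systematic offset in scores; it cannot teach the model features it never learned, which is where genuine retraining or fine-tuning on local images would come in.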

This customized approach turns artificial intelligence from a rigid, external tool into an integrated, adaptable component of a hospital’s diagnostic arsenal. The “market of one” philosophy lets an institution go beyond simple implementation and align the AI with its strategic goals. For example, a facility can set a model’s operating point, the trade-off between sensitivity and specificity, to match its own tolerance for risk and its clinical priorities, something a generic product cannot offer. A practice that prioritizes never missing a particular critical finding can tune the algorithm for maximum sensitivity, accepting more false positives. Conversely, a high-volume screening center might optimize for specificity to reduce unnecessary follow-ups. This level of customization lets the AI function as a true partner to the clinical team, addressing its specific pain points and fitting its workflow in a way a universally designed product cannot.
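Choosing an operating point on local data can be sketched in a few lines. Assuming the model outputs one score per study and the site has a labeled local validation set, the Python function below returns the highest score threshold that still meets a target sensitivity; because raising the threshold can only reduce false positives, that choice gives the best specificity available at the target. The function name and example data are hypothetical, and a specificity-first screening center would run the mirror-image search over the negative cases.

```python
import math
from typing import Sequence


def threshold_for_sensitivity(
    scores: Sequence[float], labels: Sequence[int], target_sensitivity: float
) -> float:
    """Highest threshold (call positive when score >= threshold) whose
    sensitivity on the validation set is at least target_sensitivity."""
    positive_scores = sorted(
        (s for s, y in zip(scores, labels) if y), reverse=True
    )
    if not positive_scores:
        raise ValueError("validation set has no positive cases")
    # Need at least k of the positives at or above the threshold. The small
    # epsilon guards against float error such as 0.7 * 10 == 7.000000000000001.
    k = max(1, math.ceil(target_sensitivity * len(positive_scores) - 1e-9))
    # The k-th highest positive score is the highest threshold that still
    # flags at least k positives.
    return positive_scores[min(k, len(positive_scores)) - 1]


if __name__ == "__main__":
    # Hypothetical local validation results.
    scores = [0.95, 0.8, 0.7, 0.6, 0.3, 0.5, 0.4, 0.2, 0.1, 0.05]
    labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
    print(threshold_for_sensitivity(scores, labels, 1.0))  # catch every finding
    print(threshold_for_sensitivity(scores, labels, 0.8))  # tolerate some misses
```

In the invented example, demanding 100% sensitivity drops the threshold to 0.3, which also flags two of the five negatives, while accepting 80% sensitivity allows a threshold of 0.6 with no false positives.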

Unlocking Operational and Financial Value

The most compelling and immediate use cases for this localized approach are often not purely diagnostic but operational and financial. Consider one radiology practice that was struggling with the high cost of outsourcing overnight reads to a teleradiology service, an expense that frequently exceeded the reimbursement for those same exams. This created a significant financial drain and an operational bottleneck. A generic AI designed for broad diagnostic purposes could not have addressed such a specific business problem; the practice needed a tool built to solve its own workflow inefficiency. The case shows how much value can be unlocked when AI is applied to practical, business-oriented problems rather than confined to general clinical detection tasks.

Using the AI Foundry model, the practice developed a highly specialized, narrow AI model with a single, clear function: to triage overnight studies and identify only the critical findings that required an immediate read by the expensive external service. All non-critical studies could safely wait for in-house radiologists to review them the following morning. This custom-built solution directly addressed the pressing financial and operational problem, resulting in a significant and immediate reduction in operating costs. While such a specific model might not be a broadly marketable product suitable for every hospital, its value was immense because it solved a crucial problem for that particular “market of one.” This demonstrates that the true power of AI in the near term may lie in its ability to be adapted to deliver tangible returns on investment by optimizing workflows, cutting costs, and solving the specific business challenges that healthcare organizations face daily.
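The triage rule itself is simple once a narrow model supplies a score. The Python sketch below assumes a hypothetical per-study “critical finding” score, a locally chosen threshold, and a flat per-study teleradiology fee; it splits the overnight worklist into studies sent out for an immediate read and studies held for the morning, and estimates savings relative to the assumption that every overnight study was previously sent out. None of these names or figures come from the practice described.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Study:
    accession: str         # hypothetical study identifier
    critical_score: float  # hypothetical output of the narrow triage model


def triage_overnight(
    studies: Sequence[Study], threshold: float
) -> Tuple[List[Study], List[Study]]:
    """Split overnight studies into (send out now, hold for morning)."""
    immediate: List[Study] = []
    morning: List[Study] = []
    for study in studies:
        if study.critical_score >= threshold:
            immediate.append(study)  # possible critical finding: external read
        else:
            morning.append(study)    # can wait for in-house radiologists
    return immediate, morning


def estimated_savings(n_total: int, n_immediate: int, fee_per_read: float) -> float:
    """Savings versus outsourcing every overnight study, assuming a flat fee."""
    return (n_total - n_immediate) * fee_per_read


if __name__ == "__main__":
    worklist = [
        Study("A1", 0.92),
        Study("A2", 0.15),
        Study("A3", 0.71),
        Study("A4", 0.05),
    ]
    now, later = triage_overnight(worklist, threshold=0.7)
    print("send out:", [s.accession for s in now])
    print("hold:", [s.accession for s in later])
```

The threshold carries the clinical risk here: set too high, it would hold a critical study until morning, so it would presumably be chosen from local validation data with sensitivity as the priority.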