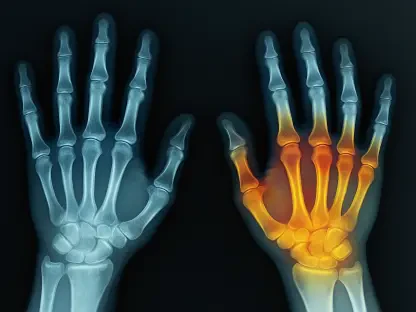

Breast cancer is a leading health concern worldwide, and the limitations of traditional imaging techniques such as mammography and ultrasound have prompted extensive research into better diagnostic methods. Both modalities play significant roles in breast cancer detection, but each has weaknesses: dense breast tissue reduces the effectiveness of mammography, and ultrasound struggles to detect microcalcifications. In addition, discordance between the Breast Imaging Reporting and Data System (BI-RADS) classifications assigned by the two modalities often leads to unnecessary anxiety and biopsies, further complicating diagnosis. These limitations underscore the need for more accurate diagnostic tools and have paved the way for artificial intelligence (AI) in medical imaging. AI shows promise in improving the accuracy of breast cancer diagnostics, particularly through deep learning platforms that merge data from multiple imaging sources and so offer a more comprehensive analysis of breast lesions.

1. Exploring Diagnostic Challenges

Mammography, the standard method recommended for breast cancer screening, is less effective in women with dense breast tissue because such tissue can obscure lesions. Ultrasound serves as a supplementary tool for these women, but it falls short in identifying microcalcifications, a critical early indicator of breast cancer. Magnetic resonance imaging (MRI) is more effective at detecting early breast cancer, yet its use remains limited to high-risk individuals because of significant costs and lengthy examination times. Combining mammography and ultrasound aims to address these shortcomings, particularly in women with dense breast tissue, but discordance between the BI-RADS classifications of the two modalities can lead to unnecessary biopsies and anxiety. Earlier methods such as shear wave elastography and contrast-enhanced ultrasound showed potential in resolving discordant BI-RADS cases but were criticized for high operator variability and inconsistent diagnostic criteria. These challenges highlight the need for a robust, objective approach that combines mammography and ultrasound and gives clinicians consistent, accurate results to inform their decisions.
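To make the notion of discordance concrete, the following Python sketch flags lesions whose mammography and ultrasound BI-RADS categories point to different management decisions. It is a minimal illustration, not a clinical rule: the threshold (categories 4 and above treated as "biopsy recommended"), the `LesionAssessment` type, and the example lesions are all assumptions introduced here, and the sketch ignores subcategories such as 4A to 4C and gives no special handling to categories 0 and 6.

```python
from dataclasses import dataclass

# Assumption for illustration: BI-RADS 4 and above means "biopsy recommended",
# BI-RADS 1 to 3 means "no biopsy". Real protocols may differ.
BIOPSY_THRESHOLD = 4


@dataclass(frozen=True)
class LesionAssessment:
    """Hypothetical record pairing the two modality readings for one lesion."""
    lesion_id: str
    mammography_birads: int
    ultrasound_birads: int


def recommends_biopsy(birads: int) -> bool:
    """Return True if the category meets the assumed biopsy threshold."""
    return birads >= BIOPSY_THRESHOLD


def is_discordant(assessment: LesionAssessment) -> bool:
    """A lesion is discordant when the two modalities disagree on biopsy."""
    return (recommends_biopsy(assessment.mammography_birads)
            != recommends_biopsy(assessment.ultrasound_birads))


# Made-up example lesions, not data from any study.
cases = [
    LesionAssessment("L-001", mammography_birads=2, ultrasound_birads=4),
    LesionAssessment("L-002", mammography_birads=4, ultrasound_birads=5),
    LesionAssessment("L-003", mammography_birads=3, ultrasound_birads=3),
    LesionAssessment("L-004", mammography_birads=5, ultrasound_birads=3),
]

discordant_ids = [c.lesion_id for c in cases if is_discordant(c)]
print(discordant_ids)
```

Under this assumed threshold, lesions L-001 and L-004 are flagged because only one of the two modalities recommends biopsy. Cases like these are where an objective second opinion would matter most.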

2. Leveraging Deep Learning Technologies

Recent advances in medical imaging have brought deep learning to the forefront, with promising capabilities in breast cancer detection and management. Notably, deep learning makes it possible to extract multimodal radiomics features, which circumvents the limitations of unimodal imaging and provides more comprehensive insight into breast lesions. Studies suggest that radiologists assisted by artificial intelligence can achieve higher diagnostic accuracy, and that novice radiologists benefit in particular from expert-level guidance in clinical decision-making. However, strategies for using deep learning models collaboratively with radiologists of varying expertise remain largely unexplored, particularly for discordant mammography and ultrasound BI-RADS classifications. Radiologists’ perceptions of deep learning outputs significantly influence clinical applicability, so those outputs need to align with clinical expectations to foster confidence in AI-assisted workflows. Exploring deep learning networks that integrate mammography and ultrasound is therefore an important step toward improving diagnostic accuracy and clinician confidence.

3. Integrating Machine Learning Models

The DL-UM network, a deep learning model that integrates ultrasound and mammography images, marks a significant step toward addressing the challenges of discordant BI-RADS classifications. Designed to exploit the complementary features of the two modalities, the model is intended to improve the accuracy of breast lesion diagnosis and the quality of management decisions when used alongside radiologists. It was evaluated on retrospectively collected imaging data from women with breast lesions who had undergone both examinations, with cases grouped into concordant and discordant BI-RADS categories. The DL-UM network outperformed standalone models based on ultrasound or mammography alone, with improvements in both sensitivity and specificity. In addition, radiologists working with the network achieved significantly better diagnostic accuracy and management outcomes, with fewer unnecessary biopsies; junior radiologists benefited most. These results point to the DL-UM network’s potential as a reliable clinical tool that improves intermodality agreement and builds confidence in AI assistance within clinical workflows.
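Sensitivity and specificity, the two metrics used in this comparison, can be computed from a confusion matrix as in the Python sketch below. It is a generic illustration rather than the evaluation code of any study: the function name and the labels are made up for this example, with 1 standing for a malignant lesion and 0 for a benign one.

```python
import math


def sensitivity_specificity(y_true, y_pred):
    """Compute (sensitivity, specificity) for binary labels.

    Sensitivity = TP / (TP + FN): share of malignant lesions correctly flagged.
    Specificity = TN / (TN + FP): share of benign lesions correctly cleared.
    Returns NaN for a metric whose denominator is zero.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)

    sensitivity = tp / (tp + fn) if (tp + fn) else math.nan
    specificity = tn / (tn + fp) if (tn + fp) else math.nan
    return sensitivity, specificity


# Made-up labels for illustration only (1 = malignant, 0 = benign).
pathology = [1, 1, 1, 0, 0, 0, 0, 1]
model_call = [1, 1, 0, 0, 0, 1, 0, 1]

sens, spec = sensitivity_specificity(pathology, model_call)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
# prints "sensitivity=0.75, specificity=0.75"
```

Higher specificity at the same sensitivity means fewer benign lesions sent to biopsy, which is why the two metrics are reported together when comparing a fused model against single-modality models.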

4. Enhancing Radiologists’ Confidence

Incorporating advanced AI models into clinical workflows not only improves diagnostic accuracy but also affects radiologists’ trust in AI-assisted tools. In clinical settings, radiologist feedback and adoption play pivotal roles in the success of AI integration. In cases with discordant BI-RADS classifications, the DL-UM network’s explainable heatmap outputs proved critical in building radiologists’ confidence: the heatmaps highlight lesion boundaries or suspicious regions and so align closely with traditional diagnostic approaches. Such tools reduce subjective interpretation bias and provide supplementary insight while respecting radiologists’ diagnostic expertise. By fostering trust and reliability, AI-assisted diagnosis can fit into daily practice and support both experienced and junior radiologists in making efficient, accurate clinical decisions. However, achieving optimal confidence in AI diagnostics, particularly when mammography and ultrasound classifications disagree, requires further investigation and refinement. This calls for continuous dialogue and feedback between radiologists and technology developers so that AI tools match real-world diagnostic needs.

5. Moving Forward with AI-Assisted Diagnostics

Successful integration of AI-assisted diagnostic tools requires acknowledging their limitations and addressing the ethical concerns inherent in medical imaging and artificial intelligence. While the DL-UM network shows potential to improve breast cancer diagnosis and management by reducing unnecessary biopsies, stakeholders must prioritize continuous model improvement to ensure robust performance across diverse clinical settings. Ongoing training with anonymized data can also improve model adaptability and help address cultural biases, making AI more broadly applicable in medical imaging. Alongside technical advances, prospective multicenter trials with large cohorts remain critical for evaluating AI’s generalizability and its effectiveness across radiologists with different levels of expertise. Collaboration among radiologists, data scientists, and ethicists is fundamental to advancing AI in clinical practice and to promoting trust, accuracy, and consistency in patient care. Future innovations should aim to combine technological and human expertise, aligning clinical workflows with data-driven insights while prioritizing patient outcomes and safety.

Conclusion

The integration of artificial intelligence into breast cancer diagnostics is a promising advance in medical imaging that addresses the complexities and limitations of traditional techniques. Deep learning fusion models that combine ultrasound and mammography data can improve diagnostic accuracy and specificity, particularly in challenging cases with discordant BI-RADS classifications. Effective collaboration between AI systems and radiologists improves clinical decision-making, reduces unnecessary biopsies, and fosters confidence in AI assistance. As the field evolves, stakeholders should prioritize model improvement and ethical considerations so that AI tools suit diverse clinical settings and expertise levels. This collaborative effort promises more precise healthcare and a more efficient radiologist workflow, shaping the future of breast cancer diagnostics.