The ubiquitous glow of smartwatches and the subtle presence of fitness rings have woven the practice of self-quantification into the very fabric of daily life, turning personal biometrics into a common language of wellness. Millions of people now meticulously track everything from their heart rate during a stressful meeting to the quality of their sleep, all driven by a desire to live healthier, more optimized lives through data. Yet, as we voluntarily amass these deeply personal digital dossiers, a critical and increasingly urgent question looms: will this torrent of information ultimately serve our best interests, or will it be weaponized against us by a growing ecosystem of corporate and governmental entities eager to harness its predictive power? The answer to this question will define the future of privacy in an era where our own bodies have become the most valuable source of data.

The Double-Edged Sword of Data Sharing

Unforeseen Dangers and Public Unease

The potential for misuse of fitness data is far from a distant, hypothetical threat; it has already manifested in startling and dangerous ways that expose the inherent vulnerabilities of our connected lives. Seemingly innocuous applications designed for personal improvement have been co-opted for malicious purposes: stalkers, for instance, have used the fitness app Strava to track the daily routines and locations of their victims. In a broader and more stunning example of unintended consequences, the aggregated user heatmap data from the same application inadvertently revealed the layouts and patrol routes of secret military bases and spy outposts around the globe. These incidents serve as a stark reminder that the data we share, even for something as benign as tracking a morning run or a bicycle ride, can carry severe security implications that extend far beyond our personal fitness goals, creating a digital breadcrumb trail that can be followed by those with ill intent.

This unsettling reality has cultivated a significant and well-founded sense of public anxiety regarding how personal data is managed and utilized by the companies that collect it. A YouGov poll conducted in March 2025 revealed that nearly half of the public, a substantial 48 percent, is worried about corporations using data harvested from their devices to uncover and analyze intimate details about their private lifestyles. At the same time, research from the Boston Consulting Group paints a more nuanced picture of public sentiment, indicating that while approximately two-thirds of people are comfortable with their health information being used to generate profits, this acceptance is heavily conditional. A mere 6 percent of respondents approve of such practices happening without any strings attached, a figure that underscores a clear and resounding public demand for greater transparency, meaningful user control, and a more equitable share in the benefits derived from their own biological information.

The Promise and Peril in Public Health

Governments worldwide are increasingly looking to harness the power of wearable technology to revolutionize public healthcare, envisioning a future where personal data streams enable a more proactive and efficient system. The UK’s National Health Service (NHS), for instance, has outlined a 10 Year Health Plan that prominently features the use of wearable tech to establish “virtual wards.” This innovative concept would allow patients to manage chronic health conditions from the comfort of their own homes while being remotely monitored by healthcare professionals. Proponents, such as Pritesh Mistry of The King’s Fund think tank, suggest that this data-driven approach could fundamentally pivot the NHS from a reactive model that treats illness to a preventative one that actively identifies and supports individuals who are already engaged in managing their own health, thereby fostering a healthier population and reducing the strain on clinical resources.

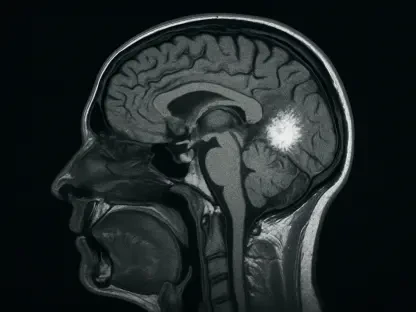

However, this ambitious and forward-thinking vision of a data-integrated healthcare system is fraught with significant risks and ethical complexities that cannot be ignored. Experts like Mistry have raised serious concerns about patient choice, as some individuals may not wish to participate in constant monitoring, and more critically, about the potential for “digital exclusion.” This new form of inequality could leave entire segments of the population behind, particularly those who are unable to afford the necessary devices or who lack the digital literacy required to use them effectively. The medical community has also expressed a healthy dose of skepticism. Professor Victoria Tzortziou Brown, representing the Royal College of GPs, notes that while wellness data can motivate patients, it often lacks the precision of medical-grade measurements. Furthermore, general practices are ill-equipped to sift through the sheer volume of data generated, and the consumer devices themselves are prone to errors that could lead to misinterpretation or unnecessary alarm.

The Commercialization Minefield

From Wellness Perks to Data Exploitation

The rapid and widespread commercialization of personal fitness data is fundamentally altering the risk landscape, transforming what was once a personal tool for self-improvement into a valuable asset for corporations. Insurers like Vitality have pioneered a seemingly benign model, offering customers tangible rewards such as discounted premiums and retail vouchers in exchange for meeting step-count goals tracked by their devices. Executives in the insurance technology sector, such as CEO Vlad Shipov, often downplay the associated privacy risks, framing the practice as the simple and harmless collection of non-sensitive activity data. This business model is gaining significant traction, particularly in markets like the UK where long NHS waiting lists are driving a surge in demand for private medical insurance, thereby expanding the customer base for these data-driven wellness programs to a much broader and more diverse demographic than ever before.

In stark contrast to the industry’s sanitized narrative, privacy advocates present a much more cautionary and critical perspective on the commercial use of fitness data. Mariano delli Santi of the Open Rights Group argues forcefully that the moment commercial incentives are introduced into the equation, the entire dynamic of data collection and use changes irrevocably. The most significant danger, he warns, lies not in the raw data itself but in the sophisticated practice of data inference, where algorithms analyze seemingly basic information to deduce far more intimate and sensitive personal details. For example, patterns of compulsive or erratic activity logged by a device could be interpreted by a third party to infer an underlying addiction or mental health condition. In a purely commercial system, there exists a powerful and almost irresistible incentive to exploit such inferred vulnerabilities for financial gain, whether through targeted advertising, risk assessment, or other means that prioritize profit over the individual’s well-being and privacy.

The Specter of Data-Driven Discrimination

The potential for data inference and exploitation paves the way for new, subtle, and insidious forms of systemic discrimination that could have profound societal consequences. Delli Santi outlines a chilling scenario he terms “discrimination by omission,” where an individual who actively chooses not to wear a device or share their personal data is systematically penalized for their assertion of privacy. In this framework, an insurance company, lacking concrete information about a person’s activity levels and health habits, might automatically classify them as a higher risk. This classification would not be based on any evidence of poor health but rather on the absence of data, leading to higher premiums or even denial of coverage. This practice effectively creates a penalty “on the basis of nothing,” compelling individuals into a system of surveillance under the threat of financial or social disadvantage, thereby eroding the very notion of a right to privacy.

Perhaps even more troubling is the potential for what could be called “discrimination by abundance.” In a world where individuals generate vast and continuous streams of personal health data, it becomes remarkably easy for an organization to find a specific data point to justify any decision it wishes to make. An employer looking to deny a promotion or an insurer seeking to increase a premium could sift through a person’s entire wellness history to cherry-pick a single anomalous heart rate reading, a period of poor sleep, or a decline in activity to rationalize their decision. This practice would allow deep-seated prejudice to be cloaked in a veneer of objective, data-driven legitimacy, effectively enabling discriminatory practices with a seemingly irrefutable justification. The overabundance of data, therefore, does not necessarily lead to fairer outcomes but instead creates an environment where any decision, no matter how biased, can be defended by selectively pointing to a piece of information from an individual’s own digital footprint.

A Precarious Digital Balance

Fitness tracker data sits in a landscape fraught with complexity and risk. Its safety is highly questionable and heavily dependent on the context of its use. While the data holds clear potential for individual motivation and could theoretically support a more preventative public healthcare system, it is simultaneously profoundly vulnerable to misuse and commercial exploitation. The risks are evolving beyond simple data breaches to include more subtle but systemic dangers. These include “digital exclusion” in healthcare, the steady erosion of personal privacy through sophisticated data inference by commercial entities, and the quiet establishment of new forms of discrimination based on either the lack of available data or the selective interpretation of an abundance of it. The central tension is between the perceived benefits of a data-rich society and the near-total lack of guarantees that this deeply personal information will be used ethically, especially as the line between personal wellness and corporate profit becomes increasingly and dangerously blurred.