Responding to a massive and deeply ingrained user trend that has been quietly unfolding for years, OpenAI has officially announced the launch of ChatGPT Health, a specialized AI platform designed to empower individuals in managing their personal health journey. The announcement, dated January 7, 2026, presents the new tool not as a visionary, top-down innovation, but as a direct and inevitable market response to the millions of people who already turn to general AI for a wide spectrum of health-related inquiries. The platform’s central goal is to formalize this existing behavior within a secure and highly specialized environment, helping users feel more informed, prepared, and confident by securely connecting their personal health data with the advanced intelligence of ChatGPT. This initiative marks a significant step in structuring the increasingly common intersection of artificial intelligence and personal health management, aiming to provide a reliable and private space for users to better understand their well-being without replacing the critical role of medical professionals.

A Tool Built on User Behavior

Formalizing an Existing Habit

The genesis of ChatGPT Health was fundamentally driven not by a corporate vision to create a new market, but by the meticulous observation of established human behavior. OpenAI recognized that a vast and diverse user base was consistently employing the standard ChatGPT model for an array of deeply personal health needs, ranging from seeking a “second opinion” on a confusing symptom to using the AI as a private, non-judgmental space to explore sensitive health concerns or even as a quasi-therapeutic confidant. These users, by demonstrating a clear, persistent, and large-scale need, effectively served as the co-architects of this new platform. The launch therefore represents a strategic pivot by OpenAI from passively observing this powerful organic trend to actively building a tailored, structured, and safer solution designed specifically to serve it. This approach underscores a modern product design philosophy where the most successful innovations often act as mirrors, reflecting and refining pre-existing habits rather than attempting to invent entirely new ones from scratch.

This strategic direction highlights the symbiotic relationship between user behavior and product development in the digital age. Rather than pushing an unsolicited technology onto the public, the company responded to a clear pull from the market. The consistent pattern of users uploading medical documents or describing symptoms to a general-purpose AI signaled an unmet demand for accessible and immediate health information processing. The introduction of ChatGPT Health is thus the logical conclusion of a user-led movement. It is the point where a company formalizes a widespread habit, building guardrails, implementing safety protocols, and creating a dedicated experience around a behavior that was already happening at scale. This formalization is critical, as it moves the activity from an unregulated, generalist environment into a purpose-built ecosystem designed with the specific sensitivities and high stakes of personal health information in mind, ultimately aiming to enhance user empowerment while mitigating potential risks.

Core Features and Capabilities

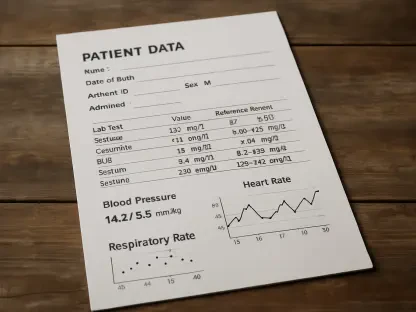

At its core, ChatGPT Health is engineered to function as a central hub for a user’s personal health information, securely integrating data from a wide variety of disparate sources. The system is designed to connect seamlessly with uploaded medical documents, such as historical records and complex lab results, allowing users to make sense of dense medical jargon. Beyond static documents, it can pull real-time data streams from popular fitness and wellness applications, including Apple Health, MyFitnessPal, Peloton, and AllTrails, to provide a dynamic view of physical activity and health metrics. The platform even extends its reach to analyze lifestyle patterns by connecting with services like Instacart, which can offer valuable insights into dietary habits. By consolidating this multifaceted information, the platform is able to deliver highly contextual, personalized responses that go far beyond generic health advice. Practical applications include translating a convoluted blood test result into plain, understandable language, identifying subtle long-term trends in wellness metrics that might otherwise go unnoticed, and proactively generating a list of pertinent questions for a user to ask their doctor during an upcoming appointment.

The global rollout of ChatGPT Health is planned to be a gradual and cautious process, beginning with a waitlist available to existing ChatGPT account holders to manage initial demand and ensure system stability. This phased approach also reflects the complex regulatory landscape surrounding health data. Notably, access will be initially restricted in the European Union, the UK, and Switzerland. This decision stems from the intricate and demanding process of resolving specific regulatory hurdles and achieving full data alignment with the distinct privacy frameworks and healthcare standards prevalent in those regions, such as the General Data Protection Regulation (GDPR). This careful, region-specific strategy underscores the company’s awareness of the immense responsibility involved in handling sensitive health information on an international scale and its commitment to meeting stringent legal and ethical requirements before making the service widely available. The initial focus remains on markets where a clear and compliant operational path can be established swiftly.

Navigating the Intersection of Technology and Medicine

Establishing Critical Boundaries

From the outset, OpenAI has been clear and emphatic in its communication, carefully framing ChatGPT Health as a supportive assistant rather than a medical authority. The platform has been deliberately engineered with crucial limitations to ensure user safety and manage expectations responsibly. The company has made it explicit that the tool does not provide medical advice, does not diagnose medical conditions, and does not prescribe treatments or medications. This ethical framing is a vital component of the product’s design, intended to mitigate the significant risks associated with misinterpretation or over-reliance on AI for critical health decisions. It is positioned as a resource to help users better understand their existing health data and prepare for more productive and informed conversations with their licensed healthcare providers. This distinction reinforces the irreplaceable value of professional medical expertise and is designed to prevent dangerous misuse, such as users attempting to self-diagnose serious conditions or delaying necessary professional care based on AI-generated information alone.

The primary function of the tool is therefore not to provide answers, but to help users formulate better questions. It is designed to act as a preparatory aid, empowering individuals by helping them organize, translate, and analyze their own information before they engage with a human doctor. By demystifying complex medical reports or highlighting trends in personal wellness data, ChatGPT Health can serve as a bridge between the patient and the healthcare system, fostering a more collaborative and efficient dynamic. The ultimate goal is to enhance the patient’s role in their own care, transforming them from a passive recipient of information into an active, engaged, and prepared participant. This careful positioning ensures that the final authority for diagnosis and treatment planning remains firmly and appropriately in the hands of qualified medical professionals, with the AI serving as a supplementary instrument to enrich, but not direct, the healthcare journey. This balance is critical to its ethical and practical success within the broader healthcare ecosystem.

Prioritizing Safety and Data Privacy

Recognizing the exceptionally high stakes of operating within the healthcare domain, the development of ChatGPT Health was underpinned by extensive medical oversight and the creation of a robust, multi-layered privacy architecture. Over a two-year development period, the project engaged more than 260 physicians from approximately 60 countries to review, refine, and stress-test the system’s responses. This global collaborative effort produced more than 600,000 pieces of feedback on model outputs, which focused not only on the technical accuracy of the medical information but also on the tone, clarity, and appropriateness of the AI’s guidance. A key area of this review was ensuring the system knew when to unequivocally and immediately direct a user to seek professional medical help. To systematize this complex evaluation process, OpenAI developed an evaluation framework called “HealthBench,” which scores AI-generated responses against a comprehensive set of clinician-defined standards for safety, correctness, and responsible communication.

Privacy was treated as a foundational pillar of the platform’s design, not as an afterthought. ChatGPT Health operates within a completely separate, isolated digital environment inside the main application, creating a secure vault for a user’s most sensitive information. All health-related conversations, connected data sources, and uploaded files are kept entirely segregated from a user’s regular ChatGPT history. This health data is encrypted both in transit and at rest and, crucially, is not used to train any of OpenAI’s core language models, preventing any potential leakage or unintended use of personal health information for general model improvement. This strict architectural separation is a clear acknowledgment of the profound sensitivity of health data. In the United States, functionality is further enhanced through a strategic partnership with b.well Connected Health, a platform that allows users to grant explicit permission for the system to securely access and summarize their official electronic health records from a network of participating providers, adding another layer of verified data and utility.

Redefining the Patient-Provider Dynamic

Enhancing the Doctor’s Visit

A primary question surrounding the launch of a tool like ChatGPT Health is whether medical professionals should view it as a threat to their practice. The answer suggested by the platform’s careful design is “no.” Artificial intelligence, in its current form, cannot replace the core competencies that define a medical professional. It is incapable of performing a physical examination, exercising the nuanced clinical judgment honed over years of experience and training, or bearing the legal and ethical accountability that comes with patient care. Instead of being a replacement, the platform is positioned as a tool that could positively alter the “starting point” of the crucial patient-doctor conversation. When patients arrive at appointments better informed about their own health data, aware of long-term trends, and prepared with a list of specific, relevant questions generated from their own information, consultations can become more efficient, collaborative, and effective for both parties. This shift would allow medical professionals to spend less time on basic data interpretation and more time on complex decision-making, treatment planning, and the essential human aspects of care that technology cannot replicate.

The User’s Critical Role

The launch of ChatGPT Health ultimately represents a major formalization of the intersection between artificial intelligence and personal health management, born directly from a massive, user-driven demand for more accessible and immediate health information. Its long-term success and safety, however, hinge on a delicate and shared responsibility between OpenAI as the creator and the individuals who use it. While the tool holds the potential to empower patients and streamline healthcare interactions, its misuse could lead to serious negative outcomes, such as reinforcing medical misinformation, causing undue anxiety, or dangerously delaying necessary medical treatment. The platform’s introduction has therefore been met with a message of cautious optimism, emphasizing that its true value can only be realized if users remain vigilant. It is imperative that they continue to prioritize professional medical advice above all else, treating the AI-driven insights not as a final, authoritative answer, but as a supplementary input in their broader healthcare journey. This responsible partnership is the key to unlocking its benefits while mitigating its inherent risks.