Welcome to an insightful conversation with James Maitland, a renowned expert in robotics and IoT applications in medicine, who brings a unique perspective to the integration of artificial intelligence in healthcare. With a deep passion for advancing medical solutions through technology, James has been at the forefront of exploring how AI-ready data can revolutionize radiology. In this interview, we dive into the critical role of high-quality data in medical imaging, the process of preparing data for AI systems, the real-world impact on clinical workflows, and the challenges that still lie ahead. Join us as we uncover how structured data is paving the way for smarter, more precise patient care.

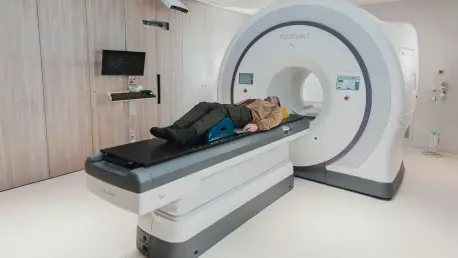

How would you describe the concept of AI-ready data in the field of radiology, and why does it matter so much?

AI-ready data in radiology refers to imaging studies and related information that are meticulously prepared and structured for artificial intelligence systems to use effectively. This means high-quality images, detailed annotations by expert radiologists, standardized formats like DICOM, and rich clinical context such as patient history. It matters because without this level of preparation, even the most advanced AI models can’t perform reliably. Poor data leads to inaccurate results, which, in a field like radiology, can have serious consequences for patient diagnosis and treatment. It’s really the foundation for making AI a trusted tool in healthcare.

What are some of the essential ingredients that make data truly ready for AI applications in medical imaging?

There are several key components. First, the images need to be clear and free of artifacts, with consistent labeling. Then, you’ve got annotations—radiologists marking specific findings or regions of interest to give the AI a clear reference point. Standardization is huge; data needs to be in a uniform format to work across different systems. Metadata is another critical piece, tying in patient history and prior studies to give context. Lastly, the data must be de-identified to protect privacy while still being accessible for AI training. All these elements together ensure the AI can learn and function effectively.

Can you walk us through how high-quality, well-prepared data contributes to training accurate AI models for radiology?

Absolutely. AI models, especially in radiology, rely on machine learning algorithms that need vast amounts of diverse, well-annotated data to identify patterns—like spotting a subtle fracture or a potential tumor. High-quality data means the AI gets accurate examples to learn from, which directly translates to better precision in detecting abnormalities. If the data is messy or incomplete, the model might miss critical signs or flag false positives. So, the better the input, the more reliable the output, which ultimately supports radiologists in making confident diagnoses.

How does the use of standardized data improve the day-to-day workflow for radiologists in clinical settings?

Standardized data is a game-changer for workflow efficiency. When data is in consistent formats and integrates smoothly with systems like PACS or EHRs, it reduces the time radiologists spend wrestling with incompatible files or hunting down missing information. AI tools can quickly pull up relevant studies, prioritize urgent cases, and even suggest preliminary findings. This lets radiologists focus more on interpreting images and making decisions rather than getting bogged down by administrative hiccups. It’s all about streamlining the process so they can handle higher volumes without sacrificing accuracy.

What are some of the biggest challenges you’ve seen in dealing with data variability in radiology, and how can they be tackled?

Data variability is a huge hurdle. You’ve got images labeled inconsistently across different facilities, missing information in DICOM fields, or variations due to different equipment. This can confuse AI models and lead to unreliable results. Tackling this starts with establishing strict protocols for labeling and data entry, training staff to follow them, and using software to automatically flag inconsistencies. Another approach is developing algorithms that can normalize data from diverse sources. It’s not a quick fix, but creating a culture of consistency and investing in standardization tools can make a big difference.
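
As an illustration of the "flag inconsistencies and normalize" idea described above, here is a minimal sketch in Python. It assumes DICOM header values have already been read into a plain dictionary keyed by standard attribute keywords; the required-field list, the alias table, and the `check_header` function are hypothetical and would need to reflect a facility's own protocol.

```python
# Hypothetical list of header fields a site's protocol requires.
REQUIRED_FIELDS = ("Modality", "BodyPartExamined", "StudyDate", "PatientSex")

# Hypothetical mapping from site-specific labels to one canonical label.
BODY_PART_ALIASES = {
    "CHEST": "CHEST",
    "THORAX": "CHEST",
    "CXR": "CHEST",
    "HEAD": "HEAD",
    "BRAIN": "HEAD",
}


def check_header(header):
    """Return (normalized_header, issues) for one study's header dict."""
    issues = []
    normalized = dict(header)  # copy so the caller's data is not modified

    # Flag required fields that are absent or blank.
    for field in REQUIRED_FIELDS:
        value = header.get(field)
        if value is None or str(value).strip() == "":
            issues.append(f"missing {field}")

    # Normalize body-part labels that differ across facilities.
    body_part = str(header.get("BodyPartExamined", "")).strip().upper()
    if body_part:
        canonical = BODY_PART_ALIASES.get(body_part)
        if canonical is None:
            issues.append(f"unrecognized BodyPartExamined: {body_part}")
        else:
            normalized["BodyPartExamined"] = canonical

    return normalized, issues
```

A study labeled `thorax` at one site and `CXR` at another would both come out as `CHEST`, while a blank `StudyDate` would be reported for manual follow-up rather than silently passed to a model.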

Can you share a real-world example where AI-ready data has made a noticeable impact on efficiency or outcomes in radiology?

Certainly. I’ve seen cases where hospitals implemented robust data pipelines to ensure their imaging studies were AI-ready, and the results were striking. One facility reported a significant reduction in turnaround time for interpreting scans because the AI system, fed with high-quality, structured data, could prioritize urgent cases—like flagging potential strokes for immediate review. This not only sped up diagnosis but also improved patient outcomes by getting critical cases to the top of the list faster. It’s a clear example of how good data preparation directly translates to better care.
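
The prioritization described above can be pictured as a priority queue over incoming studies. The sketch below is only an assumption about how such a worklist might be ordered, not a description of any particular hospital's system; the `urgency` score (imagined here as a model output between 0 and 1), the `study_id` field, and the `build_worklist` function are all hypothetical.

```python
import heapq


def build_worklist(studies):
    """Order study IDs so the highest urgency score is read first.

    Each study is a dict with a hypothetical 'study_id' and an 'urgency'
    score. Ties keep their arrival order.
    """
    heap = []
    for arrival_order, study in enumerate(studies):
        # heapq is a min-heap, so negate the score to pop the largest first;
        # arrival_order breaks ties in favor of the earlier study.
        heapq.heappush(heap, (-study["urgency"], arrival_order, study["study_id"]))

    worklist = []
    while heap:
        _, _, study_id = heapq.heappop(heap)
        worklist.append(study_id)
    return worklist
```

With this ordering, a scan flagged as a possible stroke jumps ahead of routine studies that arrived earlier, while routine studies stay in first-come, first-served order among themselves.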

How do you balance the need to share large datasets for AI development with the critical importance of protecting patient privacy?

Balancing data sharing and privacy is tricky but doable. The key is robust de-identification processes—stripping out any personal information from the data so it can’t be traced back to an individual. Beyond that, secure data governance frameworks are essential, including encryption and access controls to limit who can see what. It’s also about building trust with patients by being transparent about how their data is used and ensuring compliance with regulations like HIPAA. With the right safeguards, we can share data for innovation while keeping privacy intact.
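
To make the de-identification step concrete, here is a minimal sketch that works on header values held in a plain dictionary. The list of identifying fields and the `deidentify` function are illustrative assumptions, not a complete or compliant procedure: real de-identification also has to deal with private tags, free-text fields, and identifiers burned into the pixel data.

```python
import hashlib
import hmac

# Hypothetical, deliberately short list of directly identifying fields.
IDENTIFYING_FIELDS = {
    "PatientName",
    "PatientBirthDate",
    "PatientAddress",
    "ReferringPhysicianName",
    "AccessionNumber",
}


def deidentify(header, secret_key):
    """Drop identifying fields and replace PatientID with a keyed pseudonym.

    The same PatientID and key always give the same pseudonym, so studies
    from one patient can still be linked without exposing the original ID.
    """
    cleaned = {k: v for k, v in header.items() if k not in IDENTIFYING_FIELDS}

    if "PatientID" in header:
        digest = hmac.new(
            secret_key,
            str(header["PatientID"]).encode("utf-8"),
            hashlib.sha256,
        ).hexdigest()
        cleaned["PatientID"] = digest[:16]  # shortened for readability

    return cleaned
```

Using a keyed hash rather than a plain hash is a design choice in this sketch: without the secret key, someone who knows a patient's original ID cannot recompute the pseudonym to find that patient's studies.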

What role do radiologists play in the process of preparing AI-ready data, and why is their expertise so vital?

Radiologists are absolutely central to preparing AI-ready data, especially when it comes to annotation. They’re the ones who label images, pinpointing specific findings or abnormalities, and provide the diagnostic context that AI models learn from. Their expertise is vital because AI doesn’t inherently “know” what a lesion looks like—it needs that ground truth from skilled professionals to train on. Without their input, the data lacks the accuracy and depth needed for reliable AI performance. They’re not just users of these systems; they’re key to building them.

How do you see the long-term impact of AI-ready data on patient care within the field of radiology?

In the long term, AI-ready data has the potential to transform patient care in radiology by enabling faster, more accurate diagnoses and truly personalized treatment plans. With high-quality data, AI can help detect conditions earlier, reduce diagnostic errors, and even predict outcomes based on patterns in patient history. This means patients get interventions sooner, and radiologists can manage growing caseloads without burnout. Ultimately, it’s about creating a synergy between technology and human expertise to deliver care that’s not just efficient but also more precise and tailored to each individual.

What is your forecast for the future of AI-ready data in radiology over the next decade?

I’m optimistic about the next decade. I think we’ll see even greater strides in data standardization and integration, with more automated tools to curate and maintain AI-ready datasets. Advances in privacy-preserving technologies, like federated learning, will make data sharing safer and more widespread, fueling AI innovation. We’re also likely to see AI models becoming more adaptive, learning continuously from real-world data to stay relevant. My hope is that within ten years, AI-ready data will be the norm, not the exception, making radiology a field where technology and clinicians work hand-in-hand to push the boundaries of what’s possible in patient care.
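
For readers unfamiliar with federated learning, the core idea is that each hospital trains on its own data and shares only model parameters, which a coordinator then combines. The sketch below shows one common combining rule, a weighted average by local dataset size (often called federated averaging). The `federated_average` function and the flat-list representation of model weights are simplifying assumptions for illustration.

```python
def federated_average(site_updates):
    """Combine per-site model weights into one global weight vector.

    site_updates is a list of (weights, num_examples) pairs, where weights
    is a list of floats trained locally at one site. Sites with more
    examples contribute proportionally more. No images leave any site.
    """
    if not site_updates:
        raise ValueError("need at least one site update")

    total_examples = sum(n for _, n in site_updates)
    if total_examples <= 0:
        raise ValueError("total number of examples must be positive")

    dim = len(site_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in site_updates:
        if len(weights) != dim:
            raise ValueError("all sites must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total_examples
    return averaged
```

In practice this averaging step would be repeated over many training rounds, and it is typically paired with further safeguards, since shared parameters alone do not guarantee privacy.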