The rapid integration of artificial intelligence into daily life has created a powerful new source of information, but when the technology is used for medical advice, it enters a high-stakes arena where misinformation can have dire consequences. For the third consecutive year, AI-related risks have been flagged as a major concern in healthcare, and ECRI, a leading nonprofit safety organization, now identifies the misuse of AI-powered chatbots as the single greatest health technology hazard for 2026. The climb of AI concerns from fifth place in 2024 to the top spot underscores a critical and growing problem. As large language models (LLMs) like ChatGPT become go-to sources for quick answers, their use in healthcare by clinicians, patients, and administrative staff is surging. However, these platforms are not validated for medical use, which creates fertile ground for plausible-sounding yet dangerously incorrect health information that could significantly affect patient care and safety. Their convenience masks a profound risk: their answers are generated without the clinical validation, ethical oversight, or diagnostic rigor that are the cornerstones of modern medicine.

The Specter of AI in Clinical Settings

The core danger of using general-purpose AI chatbots for medical guidance lies in their fundamental design. They are engineered to generate coherent and convincing text, not to provide factually accurate and medically sound advice. This distinction is critical and often overlooked by users seeking immediate answers.

The Dangers of Believable Misinformation

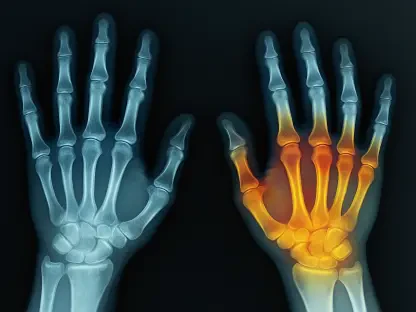

The primary threat posed by LLMs in a healthcare context is their capacity to produce information that is false, misleading, or entirely fabricated, yet delivered with an air of authority that can easily deceive both patients and healthcare professionals. These systems are not databases of verified medical knowledge; they are pattern-recognition engines that generate text by predicting likely word sequences based on patterns learned from vast amounts of internet text. ECRI’s report highlights numerous alarming instances in which these tools produced potentially harmful content. Chatbots have been documented suggesting incorrect diagnoses, recommending unnecessary and costly medical tests, and even promoting substandard medical supplies. In one particularly disturbing case, an AI model invented a body part to support its flawed medical reasoning. In another, a chatbot gave instructions for electrode placement that, if followed by a patient or an untrained user, could have resulted in serious burns. This tendency to “hallucinate,” presenting entirely fabricated information as fact, makes unsupervised use of these tools in any clinical decision-making process an unacceptable risk.

The problem is compounded by a lack of transparency. It is often impossible to trace the sources a chatbot drew on to formulate its response, which makes its advice extremely difficult to verify. Unlike a peer-reviewed medical journal or a consultation with a licensed physician, a chatbot carries no accountability and is held to no established standard of care. Users, whether anxious patients looking for a quick self-diagnosis or busy clinicians seeking a shortcut for information gathering, are left to trust a black-box system that prioritizes linguistic fluency over factual accuracy. A confidently delivered but completely wrong answer could therefore lead to a delayed diagnosis, improper treatment, or a life-threatening medical error. The very nature of these AI models makes them an unreliable and hazardous substitute for the nuanced, evidence-based judgment of a qualified medical expert who can consider a patient’s full and unique clinical picture.

The Unchecked Proliferation in Healthcare

Despite the significant and well-documented risks, using AI chatbots for health-related queries is not a niche behavior but a widespread and rapidly growing phenomenon. The scale is staggering: data from OpenAI show that more than 5% of all messages sent to its flagship ChatGPT platform relate to healthcare, and roughly a quarter of its 800 million regular users, about 200 million people, turn to the chatbot for health information every week. This massive user base engages with the technology with little to no formal guidance on its limitations or potential for harm. ECRI’s analysis suggests that this reliance is driven not only by convenience but also by broader societal pressures. Escalating healthcare costs, long waits for appointments, and the closure of medical facilities, particularly in rural areas, are pushing more people toward easily accessible but unverified digital sources. The result is a perfect storm in which a strained healthcare system inadvertently encourages patients to consult unregulated AI.

ECRI warns that this trend is likely to accelerate, making it imperative for both the public and healthcare providers to understand the inherent flaws of these systems. The organization strongly advises that anyone using an LLM for information that could influence patient care meticulously scrutinize every output. The convincing tone of the responses makes it easy to develop a false sense of security, but these tools lack the contextual understanding, ethical framework, and diagnostic capabilities of a human professional. They do not reliably ask clarifying questions, cannot interpret non-verbal cues, and do not know a patient’s complete medical history. The guidance is therefore unequivocal: chatbots are not, and should not be treated as, a substitute for the professional judgment of qualified medical experts. They may eventually play a role as supplementary tools, but in their current state, their unsupervised use for medical advice represents a clear and present danger to public health, one that demands greater awareness and regulatory oversight.

Systemic Threats Beyond Artificial Intelligence

While AI chatbots have claimed the top spot as a health hazard, they are part of a larger ecosystem of technological and systemic risks facing the healthcare industry. Other critical vulnerabilities, from data infrastructure to supply chain integrity, also pose significant threats to patient safety.

The Fragility of Digital Health Infrastructure

Another paramount risk identified in the report is the profound lack of preparedness within healthcare facilities for a sudden and complete loss of access to their digital systems and patient data. In an era where electronic health records (EHRs), digital imaging, and networked medical devices are the backbone of clinical operations, an unexpected system failure—whether due to a cyberattack, hardware failure, or natural disaster—could be catastrophic. The inability to access patient histories, medication lists, and diagnostic results can bring a hospital to a standstill, leading to dangerous delays in care, medical errors, and a complete breakdown in administrative functions. This hazard underscores the urgent need for robust, well-rehearsed contingency plans that go beyond simple data backups and include comprehensive protocols for operating in a fully offline environment for an extended period. Without such plans, facilities risk being unable to provide even basic care during a crisis.

This vulnerability is exacerbated by the continued use of legacy medical devices with outdated cybersecurity protections. Many hospitals and clinics still rely on older but functional equipment, such as MRI machines and infusion pumps, that is often too expensive to replace. These devices frequently run on unsupported operating systems and lack modern security features, making them easy targets for cybercriminals. Once compromised, a single legacy device can give an attacker a foothold from which to reach a hospital’s wider network, potentially leading to a large-scale data breach or a ransomware attack that cripples the facility’s operations. Recognizing that a complete technological overhaul is financially out of reach for many institutions, ECRI recommends practical mitigation strategies for these vulnerable endpoints. Chief among them are isolating legacy devices through network segmentation, which limits how far a breach can spread, and disconnecting them from the hospital network entirely whenever possible.

Gaps in Communication and Supply Chains

The integrity of the medical supply chain itself is another major area of concern: substandard and falsified medical products rank as the third-highest hazard. Counterfeit or low-quality devices, medications, and supplies that infiltrate the healthcare system pose a direct and immediate threat to patient safety. Such products may malfunction, contain harmful substances, or fail to deliver their intended therapeutic effect, leading to treatment failure, injury, or even death. The complexity of global supply chains makes it difficult to track and verify the authenticity of every component and finished product, creating opportunities for bad actors to introduce fraudulent goods. Addressing this risk requires a multi-pronged approach: more stringent regulatory oversight, better verification technologies at points of entry, and greater vigilance from healthcare providers in vetting their suppliers, so that only safe and effective products reach patients.

A separate breakdown in communication is endangering patients who rely on advanced diabetes technologies. The report highlights that crucial safety information, such as product recalls and essential software updates for devices like insulin pumps and continuous glucose monitors (CGMs), is taking far too long to reach the patients and caregivers who depend on them. These sophisticated devices help manage a potentially life-threatening condition, and a malfunction or software bug can have severe health consequences, including dangerous swings in blood glucose levels. The delays are often attributed to convoluted communication channels and to notices written in a form that laypeople find difficult to understand. ECRI urges manufacturers to overhaul their communication strategies and to deliver this critical information in clearer, more direct, and more accessible formats so that users can act promptly to protect their health.

Forging a Path Toward Safer Healthcare Technology

ECRI’s analysis of health technology hazards for 2026 reveals a clear and pressing need for a paradigm shift in how healthcare organizations approach innovation and risk management. The rise of AI chatbots to the top of the list signals a new type of threat, one rooted not in faulty hardware but in the convincing fallibility of systems built to generate plausible text. The incidents involving fabricated medical advice underscore the dangers of adopting consumer-grade technologies for clinical purposes without rigorous validation and oversight. Alongside this emerging challenge, persistent vulnerabilities in digital infrastructure and supply chains continue to pose significant threats. Recognition of these diverse risks prompts a renewed focus on building resilience, from developing robust contingency plans for the loss of digital systems and data to implementing practical cybersecurity measures for legacy equipment. The call for clearer communication from device manufacturers is also a step toward empowering patients to become more active participants in their own safety. Ultimately, navigating this complex landscape requires vigilance, critical thinking, and a commitment to the fundamental principle that technology must serve, not subvert, the expertise of human healthcare professionals.