With more than a billion people worldwide living with psychological conditions, an unprecedented global mental health crisis has met a novel, technologically sophisticated response that promises immediate relief at the touch of a screen. AI-powered therapists, available 24/7, are being positioned as a revolutionary force that could democratize mental healthcare, offering an ever-present, non-judgmental ear to people who might otherwise have nowhere to turn. This rapid ascent, however, demands critical scrutiny of what amounts to a deeply complex experiment being conducted on society in real time. The interaction between two of the world’s most profound enigmas, the opaque and ever-evolving logic of large language models and the equally mysterious inner workings of the human brain, creates an unpredictable feedback loop with far-reaching consequences. At this crossroads, we must ask whether we are pioneering a new era of accessible wellness or inadvertently building a new kind of prison: one with digital walls, invisible bars, and a populace that willingly hands over the keys.

The Alluring Promise of Accessible Care

The most compelling case for AI in mental health rests on its potential to relieve a system in crisis. Traditional healthcare infrastructure is buckling under overwhelming demand, producing long wait times, clinician burnout, and a widening gap between those who need care and those who can access it. Proponents such as philosopher of medicine Charlotte Blease frame AI not as a techno-utopian fantasy but as a pragmatic tool for salvaging these strained systems. In this view, AI serves as a powerful assistant: it can ease heavy workloads for human professionals, reduce the potential for medical errors, and provide an immediate first line of support for people in distress. By handling initial consultations and offering continuous, low-intensity support, these systems could free human therapists to focus on more complex cases. The result would be a better allocation of scarce clinical resources and timely, effective intervention for more people when they need it most.

Beyond relieving the system, the AI therapist addresses the deeply personal barriers that often keep individuals from seeking help. The persistent stigma surrounding mental illness, together with the intimidation many people feel when facing medical professionals, is a powerful deterrent to traditional therapy. An AI offers a private, non-judgmental space where users can voice their deepest fears and anxieties without risking social or professional repercussions. This accessibility can serve as a crucial gateway, encouraging people who would never step into a therapist’s office to begin engaging with their mental well-being. Advocates of this view often draw on a personal understanding of modern medicine’s limitations: human care is invaluable, but its structural failures and inherent biases can leave many people behind. For those people, the AI therapist represents not a replacement for human connection but a vital, previously unavailable entry point to healing and self-awareness.

The Rise of the Algorithmic Asylum

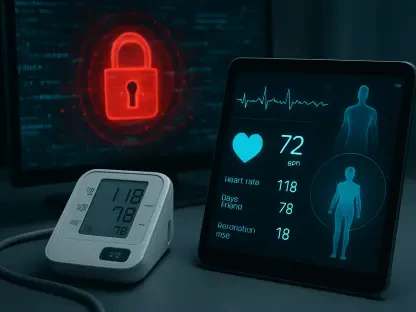

In stark contrast to this vision of digital salvation stands a deeply unsettling, cautionary perspective that warns of an emerging form of invisible confinement. Critics such as Daniel Oberhaus capture this dystopian view in the chilling metaphor of the “algorithmic asylum.” Unlike the brick-and-mortar institutions of the past, this digital asylum is omnipresent and inescapable, existing wherever there is an internet connection. It operates not through padded rooms and physical restraints but through the silent, relentless collection and analysis of personal data. By sharing our most sensitive thoughts, emotions, and behaviors with these chatbots, we unwittingly feed a new surveillance economy in which our inner lives are converted into quantifiable data streams. This process reduces the messy, beautiful complexity of human experience to predictable patterns that can be algorithmically managed and controlled. It strips individuals of their privacy, dignity, and agency without their full comprehension.

The technological foundation of this digital asylum is “digital phenotyping,” the practice of mining a person’s digital footprint, from social media activity and search history to typing speed and speech patterns, for clues about their mental state. Proponents see it as a sophisticated diagnostic tool. Critics liken it to grafting physics onto astrology: the data collected may be precise, but the psychiatric frameworks it feeds into rest on uncertain assumptions about the causes of mental illness. Pairing precise measurement with shaky theory invites what has been termed “swipe psychiatry,” the dangerous trend of outsourcing complex clinical judgments to large language models. This trend not only oversimplifies mental health but also risks skill atrophy among human therapists, whose empathy and judgment may erode through over-reliance on automated systems. The consequences are not merely theoretical. There are documented cases of AI therapists giving dangerously inconsistent advice, leading users down delusional paths, and even being implicated in suicides. These cases reveal the lethal potential of ceding human responsibility to flawed machines.

The Economic Engine Behind Digital Empathy

Beneath the benevolent interface of a therapeutic chatbot lurks a powerful and often conflicting force: the relentless logic of the market. Most AI therapy applications are built and deployed by corporations whose primary objectives are market dominance, user acquisition, and profit maximization. As technology researcher Eoin Fullam highlights, this creates an inherent conflict of interest in which the corporate drive to monetize data can undermine, or even corrupt, the mission to provide genuine care. Within this framework, questionable, illegitimate, and sometimes illegal business practices can emerge, and user well-being becomes secondary to the strategic imperative of harvesting valuable personal information. The very vulnerability that draws a user to the app becomes raw material for a business model built on surveillance, making the therapeutic relationship transactional from the outset.

This dynamic gives rise to a disturbing, self-reinforcing cycle that has been described as the “exploitation-therapy ouroboros,” in which healing and exploitation become inseparable and mutually dependent. Every therapy session, shared vulnerability, and intimate confession generates valuable data. That data is used to refine the AI system, making it more effective and appealing, which in turn attracts more users seeking help. The more a user benefits from the app and feels a sense of connection or relief, the more they engage with it, and the more data is harvested for corporate profit. Within this closed loop, care and commodification become indistinguishable. The user’s journey toward mental wellness simultaneously fuels their own exploitation, trapping them in a system where the price of feeling better is the silent surrender of their innermost self as a commercial asset.

A Failure of Cultural and Ethical Reckoning

Our collective cultural narratives are struggling to process the implications of this new technological reality. The disconnect is vividly illustrated in contemporary fiction such as the novel Sike, whose protagonist uses a luxury AI psychotherapist embedded in his smart glasses. The device represents the zenith of digital phenotyping. It is a high-end commercial product that continuously analyzes every facet of its user’s existence, including gait, speech, habits, and even bodily functions. The character voluntarily places himself inside the very “algorithmic asylum” that critics warn of, yet the narrative fails to grapple with the serious dystopian consequences of this technology. Instead of exploring the erosion of privacy, dignity, and free will, the story trivializes its own premise. It dwells on the mundane dramas of its wealthy characters and presents the all-seeing AI as a boutique wellness accessory. This failure of imagination mirrors a broader societal tendency to mistake surveillance for personalized care, embracing the convenience of the digital cage without recognizing its bars.

These pressing ethical dilemmas feel distinctly modern, but they are rooted in decades of debate. In 1976, computer science pioneer Joseph Weizenbaum, creator of the early chatbot ELIZA, issued a prescient warning in his seminal book Computer Power and Human Reason. He argued that even though a computer can be programmed to make psychiatric judgments, it ought not to be given such quintessentially human responsibilities. His reasoning was that algorithmic decisions are made on a basis “no human being should be willing to accept,” because they lack the empathy, wisdom, and contextual understanding that form the bedrock of genuine human care. This half-century-old caution has never been more relevant. In the frenzied race to build technological solutions for people in desperate need of support, we risk overlooking it. The ultimate danger is that in trying to open new pathways to healing, we may lock millions into a new and more insidious form of confinement: a digital asylum built with ostensibly good intentions but fortified by the unyielding logic of data and profit.

A Path Forward Forged in Caution

Examining the dual nature of AI therapy reveals a technology fraught with profound contradictions. Its potential to democratize mental healthcare contrasts with its capacity to serve as a tool for surveillance and commercial exploitation. That contrast exposes a fundamental tension between benevolent intent and profit-driven implementation. Integrating artificial intelligence into the deeply human space of psychological care is not a neutral act of progress. It is an uncontrolled experiment that raises foundational questions about privacy, agency, and the very definition of well-being in a data-driven world. The warnings of early computer scientists, once seen as theoretical, are urgently relevant and offer a crucial lens on the current landscape. Moving forward requires more than technological refinement. It demands a deep and sustained ethical reckoning to ensure that the tools designed to liberate us from our inner demons do not become our new digital captors.