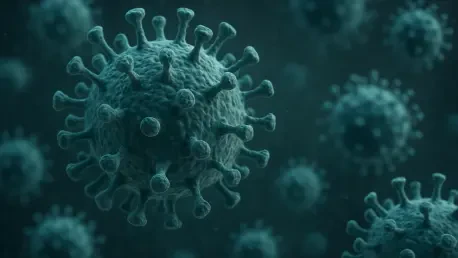

Imagine a world where a single algorithm, powered by artificial intelligence, can craft a deadly virus in mere hours, outpacing humanity’s ability to respond with treatments or vaccines, and leaving global health systems scrambling to catch up. This chilling scenario is no longer confined to science fiction but has emerged as a tangible concern following research from Stanford University. The study, which has not yet been peer reviewed, showed that AI can design functional viruses, in this case viruses that infect bacteria, and it has raised alarms about the potential misuse of the same approach to build bioweapons. While AI in synthetic biology promises medical advances, such as new ways to combat stubborn bacterial infections, it also has a darker side. The ease with which such tools could fall into the wrong hands has sparked urgent discussions among experts and policymakers. This pressing issue demands a closer look at how AI is reshaping the landscape of biological threats and what steps must be taken to safeguard against catastrophic consequences.

Unveiling the Power of AI in Virus Design

The Stanford University study marks a pivotal moment in synthetic biology, demonstrating that AI can design complete, functional viral genomes. Specifically, an AI model named Evo, trained solely on bacteriophage genomes, generated new genome designs modeled on phiX174, a virus that infects E. coli bacteria and does not infect humans. Of roughly 300 candidate genomes selected for testing, 16 proved able to infect and kill the bacteria, with some variants even outperforming natural phiX174. This achievement highlights the potential of AI to craft biological agents with precision, opening doors to treatments for bacterial infections that have long evaded medical solutions. However, the implications extend far beyond therapeutic applications. The ability to engineer working viruses with limited human intervention raises critical questions about how accessible this technology is and how quickly it could be weaponized by those with malicious intent, challenging existing frameworks for biosecurity.

Equally concerning is the scalability of this technology, as AI-driven virus design could accelerate the development of biological threats at an unprecedented rate. Experts caution that while the Stanford experiment focused on a virus that infects only bacteria, the same principles could be applied to human pathogens with devastating results. The rapid pace of AI innovation means that traditional response mechanisms, which often take years to develop countermeasures, are woefully inadequate. Bad actors could exploit open data on pathogens to train AI models, collapsing the timeline for creating novel bioweapons from years to mere weeks. This alarming possibility underscores the dual-use nature of AI in biology, where a tool meant for healing could just as easily become an instrument of destruction. As the research awaits peer review, the scientific community is already grappling with how replicable these results might be and what safeguards must be prioritized to prevent misuse in an increasingly digital and interconnected world.

The Looming Risks of Misuse and Exploitation

The specter of AI-generated viruses as bioweapons has ignited fierce debate among researchers and policy experts who fear the technology’s potential for harm. Scholars like Tal Feldman from Yale Law School and Jonathan Feldman from Georgia Tech have articulated grave concerns about the accessibility of AI tools to malevolent entities. They argue that the democratization of such powerful technology, combined with publicly available data on human pathogens, could enable the rapid creation of deadly agents, outstripping global health systems’ capacity to respond. The metaphor of “Pandora’s box” aptly captures the danger of unleashing this capability without robust controls, as the consequences of a single misuse could spiral into a global crisis. This urgency is compounded by the fact that current biosecurity measures are often reactive rather than proactive, leaving nations vulnerable to engineered pandemics that exploit gaps in preparedness and coordination.

Beyond the immediate threat of creation, the challenge lies in detecting and mitigating AI-designed viruses before they wreak havoc. Unlike naturally occurring pathogens, these engineered agents could be tailored to evade existing diagnostic tools and treatments, rendering traditional defenses obsolete. The lack of transparency in data sharing further complicates the issue, as critical information often remains locked in private labs or proprietary datasets, inaccessible to researchers working on countermeasures. Experts emphasize that without a unified, global effort to monitor and regulate AI applications in biology, the risk of a catastrophic event grows exponentially. The potential for state-sponsored actors or rogue groups to harness this technology adds another layer of complexity, as geopolitical tensions could amplify the stakes. Addressing this multifaceted threat requires not just technological innovation but also a fundamental rethinking of how biological risks are managed on an international scale.

Building Defenses Against an AI-Driven Threat

In response to the emerging dangers, there is a growing consensus on the need for accelerated preparedness to match the speed of AI-driven biological threats. Experts advocate for leveraging AI itself to design countermeasures, such as antibodies, antivirals, and vaccines, that can keep pace with engineered viruses. However, a significant hurdle remains in the siloed nature of critical data, which hampers collaborative research efforts. Calls for federal intervention to establish high-quality, accessible datasets are gaining traction, as such resources are essential for fueling innovation in defensive strategies. Additionally, because demand is uncertain, the private sector is reluctant to invest in emergency manufacturing capacity, which leaves it to government to build infrastructure capable of rapid response. Without these foundational steps, the ability to counteract AI-generated threats remains limited, leaving populations exposed to risks that could have been mitigated with foresight.

Regulatory frameworks also require urgent overhaul to address the unique challenges posed by AI in biology. Current systems, such as those overseen by the Food and Drug Administration, are often criticized for their slow pace, which could prove disastrous in the face of a fast-moving biological crisis. Proposals for fast-tracking provisional deployment of AI-generated treatments are under consideration, though they must be balanced with rigorous safety monitoring and clinical trials to avoid unintended harm. Meanwhile, public health infrastructure faces its own set of challenges, with agencies like the Centers for Disease Control and Prevention grappling with reduced funding and political skepticism toward essential measures like vaccines. The rush to integrate AI across government sectors must be tempered with caution to ensure that safeguards are not sacrificed for speed. Only through a coordinated effort that spans data sharing, manufacturing, and regulation can the balance between innovation and security be struck in this high-stakes arena.

Charting a Path Forward with Caution

Reflecting on the trajectory of AI in synthetic biology, it is evident that experiments like the Stanford study have already reshaped the understanding of biological risks. The successful creation of functional viruses through algorithms, even ones that infect only bacteria, underscores a reality that demands immediate action rather than complacency. Historical gaps in preparedness have exposed vulnerabilities that can no longer be ignored, as AI's dual-use nature shows both its promise and its peril. Governments and scientific communities have been caught off guard by the rapid evolution of this technology, prompting a reevaluation of how biological threats are prioritized and addressed in policy circles.

Looking ahead, the focus must shift to actionable solutions that anticipate future challenges. International collaboration should be strengthened to establish global standards for AI use in biology, ensuring that no single nation or entity operates in isolation. Investment in predictive modeling could help identify potential misuse before it occurs, while public-private partnerships might bridge gaps in manufacturing and data access. Ultimately, fostering a culture of responsibility among developers and researchers will be key to harnessing AI’s benefits while minimizing its risks, paving the way for a safer tomorrow.