The integration of artificial intelligence into the modern workplace has been widely discussed in terms of efficiency and job displacement, but a subtler and more immediate challenge is emerging. As AI systems become less of a tool and more of a digital collaborator, recent research from a team at Microsoft and Imperial College London suggests that the most significant impact may not be on a company’s bottom line, but on the psychological well-being of its employees. While AI promises to relieve workers of monotonous tasks and streamline processes such as accessing health support, it also introduces a new class of occupational stressors. As a result, the very technology designed to help workers could become a source of unacknowledged strain, cognitive overload, and job-related anxiety, which calls for an urgent re-evaluation of how organizations manage the human-AI interface.

The Shifting Landscape of Workplace Stress

The Emergence of Unseen Burdens

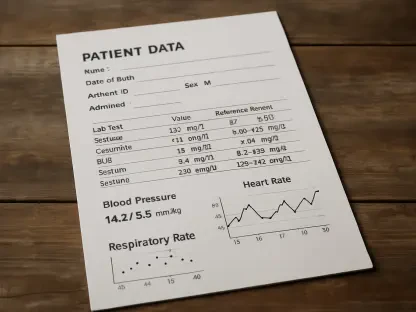

As artificial intelligence systems absorb an increasing number of routine and predictable tasks, the nature of human work is undergoing a fundamental transformation that carries significant psychological weight. Employees are being repositioned from performers of tasks to managers of complexity, stewards of strategy, and providers of nuanced emotional labor. This evolution introduces what researchers have termed “hidden workloads,” a pervasive and often unquantified form of mental strain. For instance, an employee may now be responsible for supervising the output of multiple AI agents simultaneously. Although this role is supervisory in name, it often lacks a formal definition, clear metrics for success, or acknowledgment in a job description. This unformalized responsibility becomes an invisible layer of pressure, as the employee must constantly monitor, validate, and correct autonomous systems. The very automation intended to free up human capacity can, paradoxically, create a persistent cognitive burden that offsets the expected benefits. The resulting burnout and mental exhaustion are difficult to diagnose because the work itself is not officially recognized.

The psychological demands of this new work paradigm extend beyond simple oversight into deeper cognitive and emotional realms. With mundane tasks automated, the remaining human responsibilities are almost exclusively high-stakes, requiring critical thinking, creative problem-solving, and sophisticated interpersonal skills. This shift eliminates the natural mental breaks that routine work once provided, forcing employees to operate at a high level of cognitive engagement for sustained periods. Furthermore, the remaining work places greater emphasis on emotional labor, such as managing client relationships, resolving complex team conflicts, and providing empathetic leadership, which is emotionally draining and which AI cannot replicate. This constant demand to perform complex cognitive and emotional functions, coupled with the invisible stress of supervising AI, is a recipe for chronic stress. Organizations must recognize that transitioning work to AI is not a simple offloading of tasks but a restructuring of the psychological contract with their employees, one that requires new support systems to manage these intensified mental demands.

Confronting Technological Flaws and Role Confusion

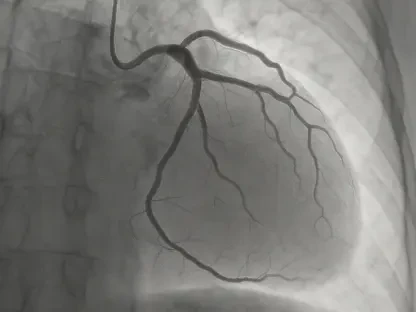

A significant and growing source of stress for employees working alongside AI is the phenomenon of “hallucination,” where the technology confidently generates inaccurate, misleading, or entirely fabricated information. The critical responsibility of identifying and correcting these falsehoods falls entirely on the human supervisor, creating a high-pressure environment of constant vigilance. This task is far from trivial; AI-generated errors are often subtle and embedded within otherwise accurate content, making them difficult to detect. As these systems become more autonomous and their reasoning processes more opaque, the challenge of spotting these errors is expected to intensify, placing an immense psychological burden on workers. The fear of missing a critical flaw that could lead to significant financial, reputational, or operational damage fosters a state of heightened anxiety. This dynamic transforms the human-AI relationship from one of collaboration to one of cautious, and often stressful, oversight, where the human is perpetually on guard against the machine’s potential failures.

This evolving dynamic inevitably leads to a state of “role ambiguity,” a well-documented psychological stressor that erodes job satisfaction and mental well-being. As AI’s capabilities expand and begin to overlap with tasks previously considered exclusively human, the lines of responsibility become increasingly blurred. Employees may find themselves questioning their purpose, value, and future within an organization where their digital counterparts are constantly learning and improving. This uncertainty is not a temporary adjustment phase but a persistent feature of the integrated workplace, leading to feelings of insecurity and anxiety about career longevity. When workers are unclear about what is expected of them versus their AI collaborators, it becomes difficult to measure their own performance or feel a sense of accomplishment. This lack of clarity undermines an employee’s sense of agency and control, two factors that are critical for maintaining positive mental health in any professional setting. The ongoing ambiguity requires a proactive management approach to clearly define and communicate the distinct and complementary roles of humans and AI.

Building a Resilient Human-AI Partnership

Redefining Job Roles for a New Era

To mitigate the mental health risks associated with AI integration, organizations must move beyond mere technological deployment and engage in a thoughtful redesign of job roles and responsibilities. The first critical step is to make the “hidden workloads” of AI supervision visible and quantifiable. This involves formally incorporating these duties into job descriptions, establishing clear performance metrics that account for the cognitive load of managing AI, and allocating appropriate time and resources for these tasks. By officially recognizing AI oversight as a core competency, companies can validate the effort it requires and provide a framework for evaluating and rewarding it. Furthermore, training programs must evolve to address not only the technical aspects of using AI tools but also the psychological challenges that accompany them. Workshops on managing ambiguity, developing critical evaluation skills for AI-generated content, and coping with the pressure of high-stakes error detection can equip employees with the resilience needed to thrive in this new environment.

Creating a psychologically safe culture is equally crucial for fostering a healthy human-AI interface. Employees must feel empowered to question, challenge, and even override AI-generated outputs without fear of negative consequences. This requires establishing clear protocols that designate the human as the final authority in the decision-making process, thereby reinforcing their sense of agency and control. Management plays a key role in promoting this culture by encouraging open dialogue about the limitations and failures of AI systems and by celebrating the uniquely human skills—such as intuition, ethical judgment, and contextual understanding—that complement the technology. When employees are positioned as essential arbiters rather than passive monitors, their role is elevated, and the sense of being controlled by the technology diminishes. This shift in perspective helps to reduce role ambiguity and reinforces the value of human expertise, which is fundamental to preserving morale and mental well-being in an increasingly automated workplace.

Charting the Future of Occupational Health

Proactive management of the human-AI relationship is the next critical frontier for occupational health. The successful integration of this technology hinges not on its computational power but on an organization’s ability to understand and address the novel psychological pressures it introduces. Simply deploying AI for efficiency gains without considering the human cost is a shortsighted strategy that ultimately undermines both employee well-being and long-term productivity. The discourse needs to shift from a narrow focus on job replacement to a more nuanced understanding of job transformation, acknowledging that new kinds of workloads and stressors require new forms of support. Organizations are most likely to thrive if they begin preparing for this workplace evolution now by investing in mental health resources, redesigning roles around human-centric principles, and fostering a culture of open communication about the challenges of working with autonomous systems. This forward-thinking approach allows the benefits of AI to be realized without sacrificing the mental health of the workforce that manages it.